Search engines are sophisticated technologies capable of processing data at a breakneck pace and serving up relevant answers. So why does it matter how search engines work?

Well, consider the impact Google has on your website. Understanding how the search engine crawls, classifies, and decides which content is relevant is critical if you want to improve your search rankings and, by extension, drive traffic to your site and increase sales.

In this article, I’ll go over the basic inner workings of search engines to help you understand all of the elements at play when it comes to ranking your content, or burying it a few pages in.


How Do Search Engines Work?

Before we dig into the details, let’s first define the term “search engine.” A search engine is web-based software that allows searchers to locate information on the internet.

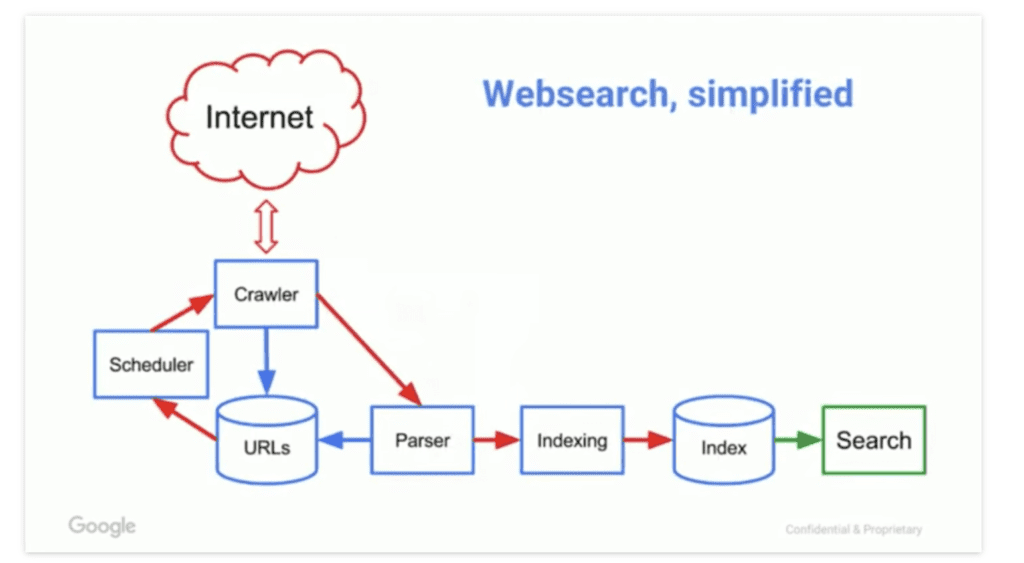

Most search engines follow a three-stage process. Crawlers comb through the web to discover new and updated pages. The information they find is collected, categorized, and indexed to build a database, and algorithms rank the indexed pages by relevance whenever someone enters a search query.


Search engines do three key things: crawl, index, and rank. Here’s a step-by-step look at how search engines work, and how to optimize for each step:

1. Crawling

Crawlers, also known as bots or spiders, are computer programs that automatically visit webpages and collect their content so the search engine can understand, organize, and index it.

Search engines kick off the crawling process by taking a list of known URLs from previous crawls and user-submitted sitemaps, which go to a scheduler that determines when to crawl each link.

From there, Google’s crawler, Googlebot, visits those known URLs and follows the links on those pages to discover new ones. The software is programmed to pay special attention to new websites, dead links, and updates to existing sites.

The crawler’s primary role is to locate new data and collect “notes” about what each page is about, what it’s for, and who might find it useful. Crawled pages then go to a parser, which extracts the critical data for indexing. Links found by the parser are added to the list of known URLs, where the scheduler determines when to crawl and recrawl those pages.
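The loop above (known URLs go to a scheduler, crawled pages go to a parser, and newly found links go back on the list) can be sketched in a few lines of Python. This is a toy model, not how Googlebot is actually built: the `WEB` dictionary and `fetch_and_parse` are hypothetical stand-ins for real HTTP requests and HTML parsing, and the scheduling policy (crawl pages closer to the starting URLs first) is an assumption chosen for simplicity. Recrawling isn’t modeled.

```python
import heapq

# Hypothetical in-memory "web" standing in for real HTTP fetches:
# each URL maps to the links found on that page.
WEB = {
    "https://example.com/": ["https://example.com/blog", "https://example.com/about"],
    "https://example.com/blog": ["https://example.com/blog/post-1", "https://example.com/"],
    "https://example.com/about": [],
    "https://example.com/blog/post-1": ["https://example.com/blog/post-2"],
    "https://example.com/blog/post-2": [],
}


def fetch_and_parse(url: str) -> list[str]:
    """Hypothetical stand-in for fetching a page and parsing out its links."""
    return WEB.get(url, [])


def crawl(seeds: list[str], max_pages: int = 100) -> list[str]:
    """Crawl outward from known URLs and return pages in the order visited."""
    # Scheduler: a min-heap of (priority, insertion order, url).
    # Hypothetical policy: pages closer to the seeds are crawled first.
    frontier = [(0, i, url) for i, url in enumerate(seeds)]
    heapq.heapify(frontier)
    seen = set(seeds)
    order = len(seeds)
    visited = []
    while frontier and len(visited) < max_pages:
        depth, _, url = heapq.heappop(frontier)
        visited.append(url)
        for link in fetch_and_parse(url):
            if link not in seen:  # a newly discovered URL goes back to the scheduler
                seen.add(link)
                heapq.heappush(frontier, (depth + 1, order, link))
                order += 1
    return visited


print(crawl(["https://example.com/"]))
```

A real scheduler would also weigh factors like how often a page changes and how much crawling a site can handle, which is why the frontier here is a priority queue rather than a plain list.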

2. Indexing

After a crawler finds a page, the search engine renders it just like a browser would. During that process, the search engine analyzes the content and stores it in a database known as an index. Along with each URL, the index records the following pieces of information:

- Keywords and topics: What concepts does this page cover?

- Schema: What type of content was crawled? Structured data, usually written with the schema.org vocabulary in formats such as Microdata or JSON-LD, lets website owners mark up specific parts of a webpage to indicate specific features and content types. (For more direction, this article covers which markups work best by industry.)

- Freshness: When was this page last updated?

- Engagement: How often do people interact with this page, and how do they interact with its various on-page elements?
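One common way to build this kind of index is an inverted index, which maps each term to the pages that contain it and keeps per-page metadata alongside. The Python sketch below assumes that design; it is a simplified illustration rather than Google’s actual index, and the `PageRecord` fields are hypothetical stand-ins for the schema and freshness data described above.

```python
import re
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class PageRecord:
    """Hypothetical per-URL metadata; a real index stores far more."""
    url: str
    schema_type: str    # content type from structured data, e.g. "Recipe"
    last_updated: date  # freshness signal


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


class InvertedIndex:
    def __init__(self) -> None:
        self.postings: dict[str, set[str]] = defaultdict(set)  # term -> URLs
        self.records: dict[str, PageRecord] = {}                # URL -> metadata

    def add(self, record: PageRecord, text: str) -> None:
        """Store the page's metadata and map each of its terms to its URL."""
        self.records[record.url] = record
        for term in tokenize(text):
            self.postings[term].add(record.url)

    def lookup(self, query: str) -> set[str]:
        """Return the URLs that contain every query term."""
        terms = tokenize(query)
        if not terms:
            return set()
        results = set(self.postings.get(terms[0], set()))
        for term in terms[1:]:
            results &= self.postings.get(term, set())
        return results


index = InvertedIndex()
index.add(PageRecord("https://example.com/brownies", "Recipe", date(2023, 5, 1)),
          "Fudgy brownies recipe")
index.add(PageRecord("https://example.com/seo", "Article", date(2023, 6, 1)),
          "SEO tips for beginners")
print(index.lookup("brownies recipe"))  # {'https://example.com/brownies'}
```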

3. Serving & Ranking

At this stage, the search engine has collected website data through crawling and organized those findings via indexing. The next stage is serving and ranking web pages so that users receive the most relevant information possible.

When a user enters a search query, it’s the search engine’s job to find the most relevant answer from the index based on several key factors.

I’ll get to those factors in a moment, but the basic idea is that Google aims to deliver the highest-quality results, then factors in other considerations like location, device, language, search history, and intent to further improve the user experience.
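Google hasn’t published its ranking formula, so the following is only a toy model of the idea in this paragraph: score pages on relevance and quality first, then adjust for the searcher’s context. The `Candidate` fields, the weights, and the mobile penalty are all hypothetical, and only two context signals (language and device) are modeled.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    url: str
    relevance: float       # hypothetical 0-1 query-match score
    quality: float         # hypothetical 0-1 page-quality score
    language: str
    mobile_friendly: bool


def serve(candidates: list[Candidate], user_language: str, on_mobile: bool, k: int = 10) -> list[str]:
    """Rank by relevance and quality, then adjust for the searcher's context."""
    scored = []
    for c in candidates:
        if c.language != user_language:
            continue  # skip pages in a language the searcher didn't use
        score = 0.6 * c.relevance + 0.4 * c.quality  # hypothetical weights
        if on_mobile and not c.mobile_friendly:
            score *= 0.8  # hypothetical penalty for a poor mobile experience
        scored.append((score, c.url))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [url for _, url in scored[:k]]


pages = [
    Candidate("https://example.com/a", relevance=0.95, quality=0.6, language="en", mobile_friendly=False),
    Candidate("https://example.com/b", relevance=0.7, quality=0.9, language="en", mobile_friendly=True),
    Candidate("https://example.de/c", relevance=1.0, quality=1.0, language="de", mobile_friendly=True),
]
print(serve(pages, "en", on_mobile=False))  # ['https://example.com/a', 'https://example.com/b']
print(serve(pages, "en", on_mobile=True))   # ['https://example.com/b', 'https://example.com/a']
```

The same two pages swap places depending on the searcher’s device, which is the point: the “best” result depends partly on who is asking.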

How Do Search Engine Algorithms Work?

Finding and indexing websites is just the beginning. Search engines index millions of pages (billions, in Google’s case), so they rely on algorithms to deliver and rank the most relevant results.

Beyond the basics, there are also some things you should know about the algorithm and how it determines what is good, relevant, timely, and so on.

RankBrain

RankBrain is a machine-learning algorithm introduced in 2015 to help Google process search results. It performs two main tasks: understanding search queries and measuring user satisfaction.

Before RankBrain, Google would scan the index for the exact keywords someone typed into the search bar. The problem was that about 15% of the queries Google received each day were ones it had never seen before.

Today, RankBrain doesn’t just match keywords, it tries to determine what searchers mean when they enter a specific query.

On the UX side, RankBrain aims to provide the results that best serve user needs.

It looks at the following areas to understand how searchers engage with the results:

- Dwell time

- Bounce rate

- Pogo-sticking (when a user clicks through multiple results before finding one that answers their question)

- Click-through rates

From there, the algorithm adjusts rankings based on its findings.

For example, if RankBrain detects that a URL positioned toward the bottom of the page is delivering a better answer to users than higher-ranked results, the algorithm will reorder the rankings and place the more relevant content at the top of the page.

Or, if it notices a high number of bounces on a particular result, that suggests the result might not be the most relevant answer to the query, and the algorithm will adjust rankings based on those patterns.

RankBrain learns from user behavior and over time gets better at determining the intent behind search queries.
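Google hasn’t published how RankBrain weighs these engagement signals, so treat the following Python sketch as a toy illustration of the behavior described above: a result that sends people straight back to the search page sinks, and one that holds their attention rises. The weights, the 120-second dwell cap, and the function names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ResultStats:
    url: str
    impressions: int          # times the result was shown
    clicks: int
    quick_returns: int        # clicks followed by a fast return to the results (pogo-sticking)
    avg_dwell_seconds: float  # average time spent on the page after clicking


def satisfaction(s: ResultStats) -> float:
    """Hypothetical satisfaction score combining the signals listed above."""
    if s.impressions == 0 or s.clicks == 0:
        return 0.0  # no engagement data to learn from yet
    ctr = s.clicks / s.impressions
    pogo_rate = s.quick_returns / s.clicks
    dwell = min(s.avg_dwell_seconds / 120.0, 1.0)  # treat 2+ minutes as fully engaged
    return 0.4 * ctr + 0.4 * dwell + 0.2 * (1.0 - pogo_rate)


def rerank(results: list[ResultStats]) -> list[str]:
    """Reorder results (given in current rank order) by observed satisfaction."""
    # sorted() is stable, so equally satisfying results keep their current order.
    return [s.url for s in sorted(results, key=satisfaction, reverse=True)]


current = [
    ResultStats("https://example.com/top", 1000, 300, 240, 15.0),
    ResultStats("https://example.com/bottom", 1000, 150, 15, 180.0),
]
print(rerank(current))  # ['https://example.com/bottom', 'https://example.com/top']
```

One design caveat: scoring results with no clicks as zero would bury brand-new pages, so a real system would need some way to give fresh results a chance to collect data.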


BERT

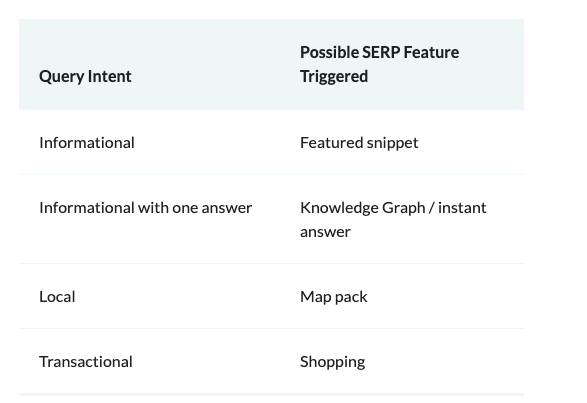

In late 2019, Google added a new algorithm to the mix to help analyze search queries. Like RankBrain, BERT is used to pick up on the nuances and context implied when searchers enter a query to deliver the most relevant content to the user. It is also used to determine which featured snippets and SERP features deliver the best possible answer.

BERT is additive to RankBrain’s existing capabilities and is used exclusively for queries, not on-page content.
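BERT itself is a large neural language model, so it won’t fit in a short snippet, but the problem it addresses is easy to show. A pure keyword (bag-of-words) view of a query ignores word order, so two queries with opposite meanings can look identical. The Python sketch below uses adjacent word pairs (bigrams) as a deliberately crude stand-in for context; it is not how BERT works, just an illustration of why context matters.

```python
from collections import Counter


def bag_of_words(query: str) -> Counter:
    """Order-insensitive representation: just word counts."""
    return Counter(query.lower().split())


def bigrams(query: str) -> Counter:
    """Crude order-aware representation: counts of adjacent word pairs."""
    words = query.lower().split()
    return Counter(zip(words, words[1:]))


q1 = "brazil traveler to usa need a visa"
q2 = "usa traveler to brazil need a visa"

print(bag_of_words(q1) == bag_of_words(q2))  # True: the direction of travel is lost
print(bigrams(q1) == bigrams(q2))            # False: word order is preserved
```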

Relevance

To determine webpage relevance, search engines look for signals that a page contains the information users are looking for using a combination of interaction data, keyword mentions, and other factors.
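Keyword mentions are the easiest of these signals to illustrate. TF-IDF is a classic information-retrieval technique that weights a term by how often a page uses it and how rare it is across all pages. Google doesn’t document using TF-IDF, so the Python sketch below is only a textbook example of keyword-based relevance scoring; `tf_idf_scores` is a hypothetical helper.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into alphanumeric terms."""
    return re.findall(r"[a-z0-9]+", text.lower())


def tf_idf_scores(query: str, docs: dict[str, str]) -> dict[str, float]:
    """Score each document by the summed TF-IDF weight of the query's terms."""
    tokenized = {url: tokenize(text) for url, text in docs.items()}
    vocab = {url: set(tokens) for url, tokens in tokenized.items()}
    n_docs = len(docs)
    scores = {}
    for url, tokens in tokenized.items():
        counts = Counter(tokens)
        score = 0.0
        for term in set(tokenize(query)):
            df = sum(1 for words in vocab.values() if term in words)  # pages using the term
            if df == 0 or not tokens:
                continue
            tf = counts[term] / len(tokens)  # share of this page's words that are the term
            idf = math.log(n_docs / df)      # rarer terms carry more weight
            score += tf * idf
        scores[url] = score
    return scores


docs = {
    "https://example.com/brownies": "Brownie recipe with fudgy brownie tips",
    "https://example.com/seo": "SEO tips for beginners",
    "https://example.com/boots": "Hiking boots review",
}
scores = tf_idf_scores("brownie recipe", docs)
print(max(scores, key=scores.get))  # https://example.com/brownies
```

A term that appears on every page gets an IDF of zero (log 1), so it contributes nothing to the ranking. That is intentional: words every page shares don’t help tell pages apart.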

Here’s a look at the four areas Google uses to match queries to relevant content.

Style

Content style refers to the medium: text, image, or video. For most queries, one style tends to dominate the results. For instance, if I type in “content strategies for B2B marketing,” I’ll get a long list of blog posts on that topic.

Alternatively, if I type in something less specific like “hiking boots,” Google doesn’t have much to work with, and as a result, serves up shopping results, images, local retailers, and “best of” lists.

Format

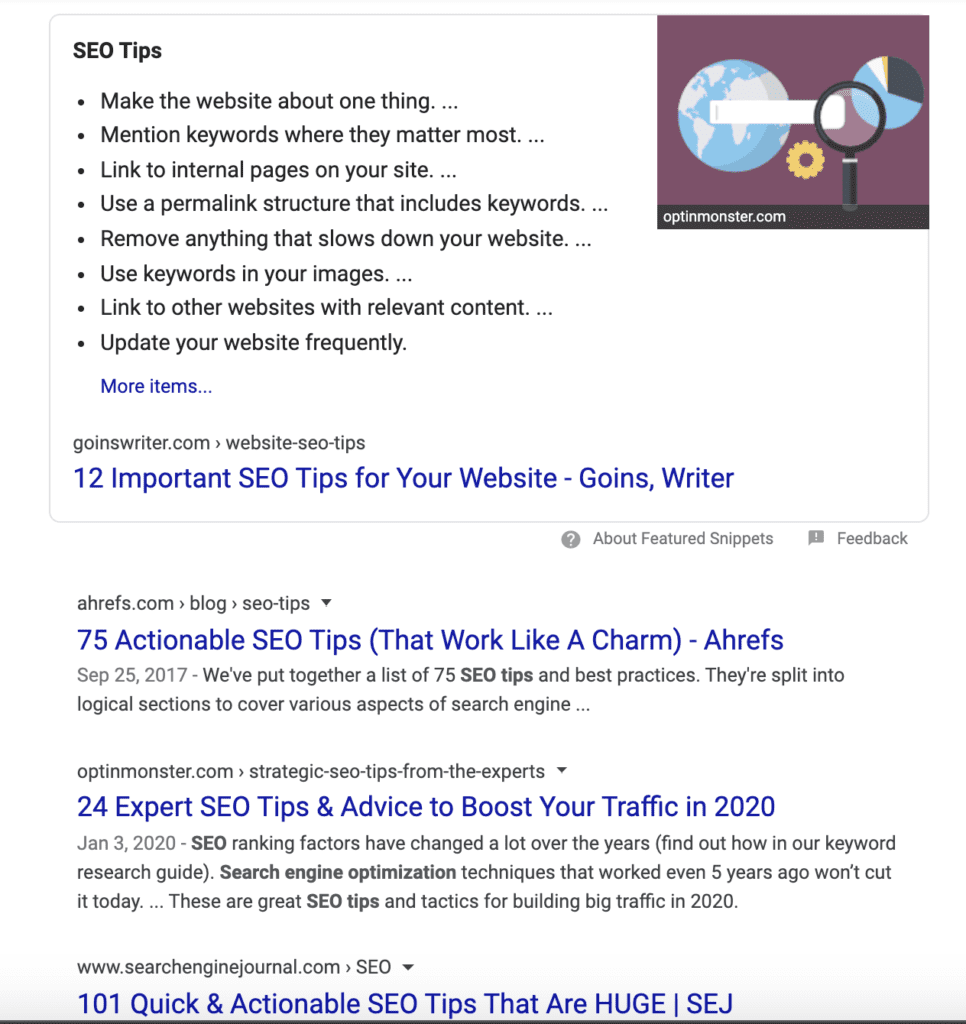

The algorithm also considers which format makes the most sense based on the query. For example, all of the top-ranking results for “SEO tips” are numbered lists.

Example of a search engine result page for “seo tips” search

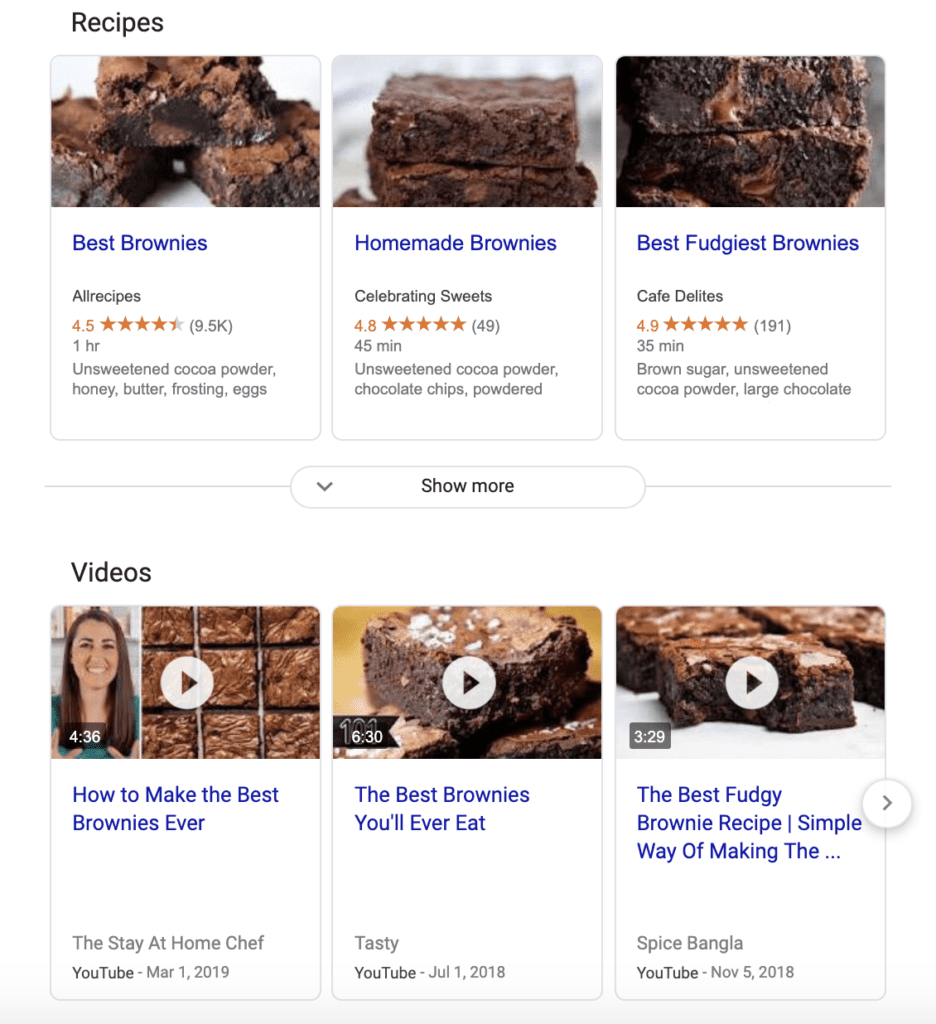

By contrast, if I type in “how to make brownies,” the top results are all recipes, not blog posts or news updates.

Search engine result featured snippet for brownie recipes

Type

Content type identifies the purpose of a page, sorting content into categories such as product pages, category pages, blog posts, videos, and landing pages.

Again, if I ask Google how to make brownies, the top results are informational: a mix of video and text-based recipes I can review to find the tastiest-sounding option.

In this case, it doesn’t make sense to serve up a category page where I can buy brownie mix, even though that would be one way of achieving my goal.
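As a rough illustration, the Python sketch below guesses a query’s purpose from a few cue words and maps it to content types that would suit it. The cue lists, category names, and functions are hypothetical; real search engines infer intent with machine-learned models rather than fixed word lists.

```python
# Hypothetical cue words; real search engines learn intent from data
# with machine-learned models rather than fixed word lists.
INTENT_CUES = {
    "transactional": {"buy", "price", "cheap", "deal", "order"},
    "informational": {"how", "what", "why", "recipe", "guide", "tips"},
}

PREFERRED_TYPES = {
    "transactional": ["product pages", "category pages"],
    "informational": ["blog posts", "videos"],
    "unclear": ["product pages", "category pages", "blog posts", "videos"],  # serve a mix
}


def classify_intent(query: str) -> str:
    """Return the first intent whose cue words appear in the query."""
    words = set(query.lower().split())
    for intent, cues in INTENT_CUES.items():
        if words & cues:
            return intent
    return "unclear"


def preferred_content_types(query: str) -> list[str]:
    """Map a query to the content types that best fit its likely purpose."""
    return PREFERRED_TYPES[classify_intent(query)]


print(preferred_content_types("how to make brownies"))  # ['blog posts', 'videos']
print(preferred_content_types("buy brownie mix"))       # ['product pages', 'category pages']
```

The “unclear” bucket mirrors the “hiking boots” example: when the purpose is ambiguous, a mix of content types is a reasonable hedge.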

Angle

The content angle describes the key selling point of the content. In the brownies example, the dominant angle is brownie recipes; in the SEO tips example, it’s a roundup of tips and tricks.

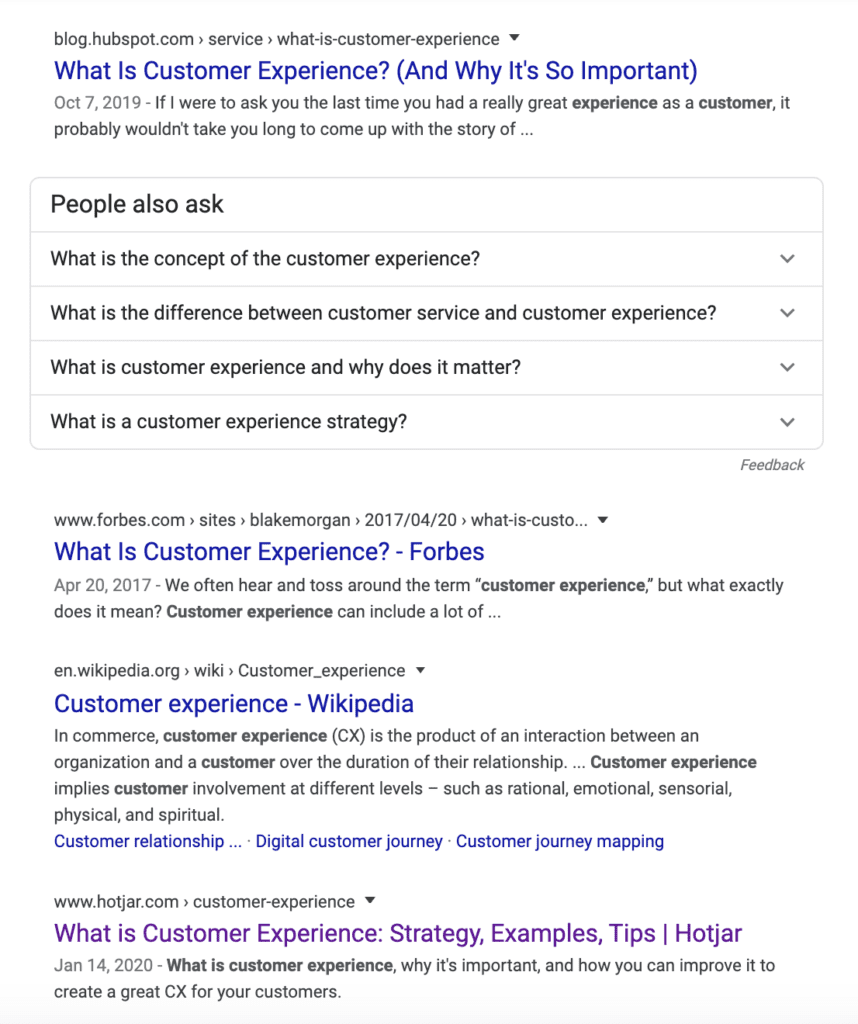

Now, if I type in something like “what is customer experience?” the dominant angle is introductory posts aimed at defining CX and providing examples.

Search engine result for “what is customer experience?” search

Context & Setting

According to Google, information about your search history, location, and search settings also factors into creating a relevant experience for the user.

If you search for “best auto repair” or “pizza places open late,” Google will serve up a list of nearby results, highlighting the top ones in the local 3-pack and showcasing your options on a map.
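A local pack can be sketched as “filter by distance, then rank the nearby options.” In the Python example below, distance is computed with the haversine (great-circle) formula, and each business’s `prominence` is a hypothetical 0-1 score standing in for signals like reviews. The 10 km radius and the scoring formula are assumptions, not Google’s.

```python
import math
from dataclasses import dataclass


@dataclass
class Business:
    name: str
    lat: float
    lon: float
    prominence: float  # hypothetical 0-1 score (reviews, mentions, etc.)


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance between two points on Earth, in kilometres."""
    r = 6371.0  # mean Earth radius in km
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * r * math.asin(math.sqrt(a))


def local_pack(businesses: list[Business], user_lat: float, user_lon: float,
               max_km: float = 10.0, size: int = 3) -> list[str]:
    """Pick the top `size` nearby businesses, favoring prominent and close ones."""
    scored = []
    for b in businesses:
        d = haversine_km(user_lat, user_lon, b.lat, b.lon)
        if d <= max_km:
            score = b.prominence / (1.0 + d)  # hypothetical: closer and more prominent wins
            scored.append((score, b.name))
    scored.sort(reverse=True)
    return [name for _, name in scored[:size]]


shops = [
    Business("Pizza A", 40.00, -75.0, prominence=0.5),
    Business("Pizza B", 40.01, -75.0, prominence=0.9),
    Business("Pizza C", 40.02, -75.0, prominence=0.8),
    Business("Pizza D", 41.00, -75.0, prominence=1.0),  # about 111 km away: too far
    Business("Pizza E", 40.05, -75.0, prominence=0.6),
]
print(local_pack(shops, 40.0, -75.0))  # ['Pizza A', 'Pizza B', 'Pizza C']
```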

Freshness

Google’s Freshness algorithm is used to deliver results related to trending topics, news items, and anything that’s “happening right now.” For example, a search for movie times demands the latest results.
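Google hasn’t documented the math behind its freshness ranking, but one common way to model “newer is better for this query” is exponential decay with a half-life. In this hypothetical Python sketch, a time-sensitive query like movie times would use a short half-life so week-old pages fade quickly, while an evergreen query would use a long one.

```python
from datetime import datetime, timedelta, timezone


def freshness_boost(published: datetime, now: datetime, half_life_hours: float) -> float:
    """Exponential decay: the boost halves every `half_life_hours` of page age."""
    age_hours = max((now - published).total_seconds() / 3600.0, 0.0)
    return 0.5 ** (age_hours / half_life_hours)


now = datetime(2024, 1, 8, tzinfo=timezone.utc)
week_old = now - timedelta(days=7)

# Hypothetical half-lives: one day for "movie times", one year for evergreen topics.
print(freshness_boost(week_old, now, half_life_hours=24))  # 0.0078125
print(freshness_boost(week_old, now, half_life_hours=24 * 365))
```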

Content Quality

It’s in Google’s best interest to deliver high-quality content to its users. The challenge is that quality content is hard to define. To address this, Google uses a set of guidelines called EAT to help its quality raters objectively separate the good from the bad.

EAT stands for expertise, authoritativeness, and trustworthiness. In a nutshell, it provides a framework for identifying (and, for marketers, creating) content that accomplishes the following:

- Anticipates searcher needs and exceeds expectations.

- Provides comprehensive answers to questions.

- Is useful to the reader.

- Backs up its claims with credible sources.

If you’d like to learn more, I go over EAT guidance in more detail here.

Usability

Finally, the algorithm also looks for content that is easy to consume. Factors like proper formatting, mobile-friendliness, page speed, and accessibility also help determine ranking.

Wrapping Up

Learning the ins and outs of how search engines work is important because it sets the stage for a smarter SEO strategy. For example, understanding how Google defines “quality content” or “relevance” can help inform your approach to writing blog posts or optimizing your YouTube channel.

It also helps you understand how to format content for best results (e.g., why you might use H2s and H3s, add structured markup to your site, or take the time to optimize metadata).

While it’s unlikely that most of us will ever fully grasp the inner workings of the machine-learning and AI technology that powers the SERPs, hopefully, you now have a better sense of the “why” behind the SEO best practices we use every day.