Robots.txt, also known as the Robot Exclusion, is key in preventing search engine robots from crawling restricted areas of your site.

In this article, I’ll go over the basics of how to block URLs in robots.txt.

What We’ll Cover:

- What a robtos.txt file is

- Examples of how to use robots.txt

- When you should use it

- Getting started

- How to create a robots.txt file

- How to disallow a file

- How to save your robots.txt

- How to test your results

- FAQ

What is a Robots.txt File?

Robots.txt is a text file that webmasters create to teach robots how to crawl website pages and lets crawlers know whether to access a file or not.

While it does have the ability to lower your ranking on a SERP, its most important function is to avoid overloading your site with requests. Most people use a robots.txt file to tell search engine crawlers to avoid unimportant information so that it focuses more on important and up-to-date information on a site.

When crawlers visit your site, the first thing they look for is a robots.txt file. This file tells the crawler which content to crawl and index and which content should be avoided. Basically, it works by blocking the crawlers from certain content and redirecting it elsewhere.

You may want to block urls in robots.txt to keep Google from indexing private photos, expired special offers or other pages that you’re not ready for users to access. Using it to block a URL can help with SEO efforts.

It can solve issues with duplicate content (however there may be better ways to do this, which we will discuss later). When a robot begins crawling, they first check to see if a robots.txt file is in place that would prevent them from viewing certain pages.

Important Information About Robots.txt

When using a robots.txt file, be careful! If you install a robots.txt file incorrectly, you could inadvertently tell search engines to disallow all of your contentt. If this happens, your site will be removed from search engine results pages, having a negative effect on your traffic, conversions, and overall revenue.

However, if, for some reason, you do want to block Google from your website and remove it completely, use a noindex file, rather than a robots.txt file.

It’s also important to keep in mind that a robots.txt file will keep your website, images, videos, and audio files from appearing on the SERP but doesn’t stop other people from linking to your content or featuring it on their pages.

It’s also worth noting that not all crawlers will obey your command. While Googlebot and other top-name crawlers almost always will, other, lesser-known crawlers may not. If there is something on your site that you absolutely do not want to be exposed to, it’s better to put it behind a password-protected file or paywall rather than a robots.txt file.

Examples of How to Block URLs in Robots.txt

Here are a few examples of how to block URLs in robots.txt:

Block Everything

If you want to block all search engine robots from crawling parts of your website, you can add the following line in your robots.txt file:

User-agent: *

Disallow: /

Block a Specific URL

If you want to block a specific page or directory, you can do so by adding this line in your robots.txt file.

User-agent: *

Disallow: /private.html

If you want to block several URLs:

User-agent: *

Disallow: /private.html

Disallow: /special-offers.html

Disallow: /expired-offers.html

Block a Specific File Type

If you want to block a specific file type from being indexed, you can add this line to your robots.txt file:

User-agent: *Disallow: /*.html

Using a Canonical Tag

If you want to prevent search engine robots from indexing duplicate content, you can add the following canonical tag to the duplicate page.

rel=”canonical” href=”https://www.example.com/original-url”

Where Should I Upload My Robots.txt File?

Once you’ve downloaded and edited your Robots.txt file, it’s time to upload it to the root of your domain. Uploading your file depends on your platform or hosting site. Some services, such as Blogger or Wix, won’t let you access the file directly. If this is the case, explore your platform’s settings to see what options are available to you.

If you can edit your robots.txt file directly, you’ll want to save it with UTF-8 encoding after you’re finished editing.

Now it’s time to upload your robots.txt file to the root of your domain. Since this part is so dependent on your domain hosting site, there is no one correct way to do this. Check with your hosting provider to see your next steps.

Getting Started With Robots.txt

Before you start putting together the file, you’ll want to make sure that you don’t already have one in place. To find it, just add “/robots.txt” to the end of any domain name—www.examplesite.com/robots.txt. If you have one, you’ll see a file that contains a list of instructions. Otherwise, you’ll see a blank page.

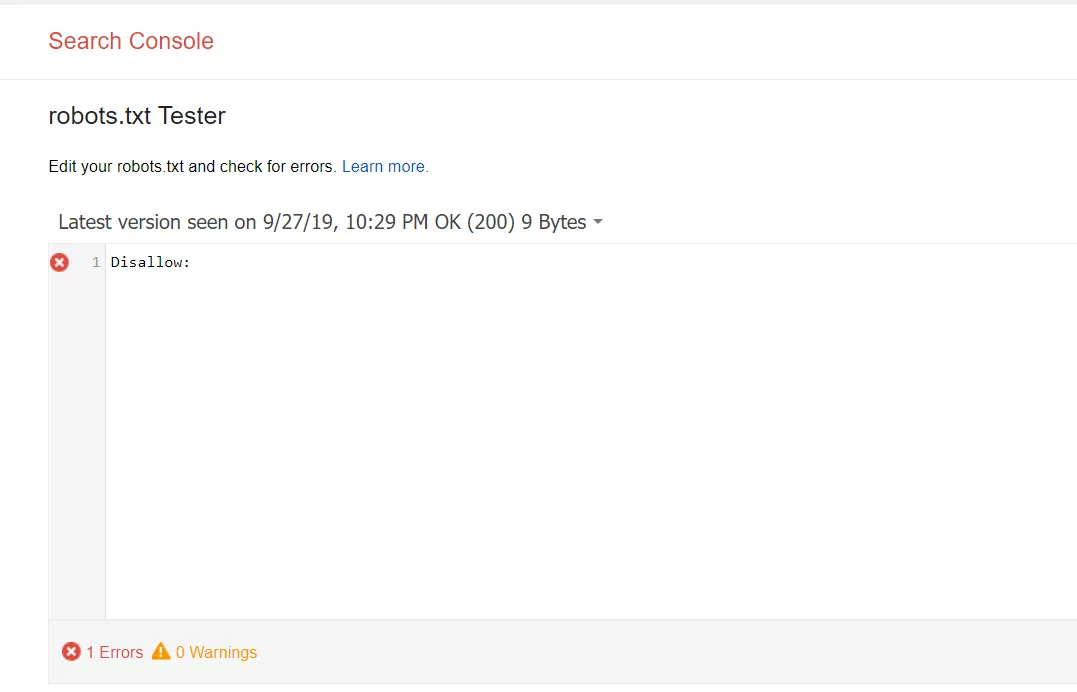

Next, Check if Any Important Files Are Being Blocking

Head over to your Google Search Console to see whether your file is blocking any important files. The robots.txt Tester will reveal whether your file is preventing Google’s crawlers from reaching certain parts of your website.

It’s also worth noting that you might not need a robots.txt file at all. If you have a relatively simple website and don’t need to block off specific pages for testing or to protect sensitive information, you’re fine without one. And—the tutorial stops here.

Setting Up Your Robots.Txt File

These files can be used in a variety of ways. However, their main benefit is that marketers can allow or disallow several pages at a time without having to access the code of each page manually.

All robots.txt files will result in one of the following outcomes:

- Full allow—all content can be crawled

- Full disallow—no content can be crawled. This means that you’re fully blocking Google’s crawlers from reaching any part of your website.

- Conditional allow—The rules outlined in the file determine which content is open for crawling and which is blocked. If you’re wondering how to disallow a url without blocking crawlers off from the whole site, this is it.

If you would like to set up a file, the process is actually quite simple and involves two elements: the “user-agent,” which is the robot the following URL block applies to, and “disallow,” which is the URL you want to block. These two lines are seen as one single entry in the file, meaning that you can have several entries in one file.

Formatting Your Robots.Txt File

Once you’ve determined which URLs you want to include in your robots.txt file, you’ll need to format it correctly.

When setting up your file, you should add the following components:

User-Agent

This is the robot that you want the following rules to apply to. It’s often written in the following format:

User-agent: [robot name]

The most common robots you’ll find here are Googlebot and Bingbot

Disallow

This is the part of the file where you’ll specify which URLs should be blocked. The syntax for this usually looks like:

Disallow: [URL or directory]

So, if you want to block the directory “/privacy-policy/”, you’ll want to add “/privacy-policy/” as your disallow entry.

You can also use wildcards in your robots.txt file, letting you block multiple URLs in one go.

Sitemap

The sitemap element is optional. However, search engines may take it as a sign that you’re trying to make sure your site is easily navigable and trustworthy. This can help your ranking in the SERPs.

Your sitemap entry should look something like this:

Sitemap: https://www.example.com/sitemap.xml

Once you have your file set up, all you have to do is save it as “robots.txt,” upload it to the root domain, and you’re done.

Your file will now be visible at https://[yoursite.com]/robots.txt.

Location

You should always place the robots.txt file in the root domain of your website.

Note that the address is case-sensitive, so you should not use capital letters. It should always start with an “/.”

For example, the file can be found at https://www.example.com/robots.txt, but not https://www.example.com/ROBOTS.TXT.

How to Block URLs in Robots.txt

For the user-agent line, you can list a specific bot (such as Googlebot) or can apply the URL txt block to all bots by using an asterisk. The following is an example of a user-agent blocking all bots.

User-agent: *

The second line in the entry, disallow, lists the specific pages you want to block. To block the entire site, use a forward slash. For all other entries, use a forward slash first and then list the page, directory, image, or file type

Disallow: / blocks the entire site.

Disallow: /bad-directory/ blocks both the directory and all of its contents.

Disallow: /secret.html blocks a page.

After making your user-agent and disallow selections, one of your entries may look like this:

User-agent: *

Disallow: /bad-directory/

View other example entries from Google Search Console.

How to Save Your File

- Save your file by copying it into a text file or notepad and saving as “robots.txt”.

- Be sure to save the file to the highest-level directory of your site and ensure that it is in the root domain with a name exactly matching “robots.txt”.

- Add your file to the top-level directory of your website’s code for simple crawling and indexing.

- Make sure that your code follows the correct structure: User-agent → Disallow → Allow → Host → Sitemap. This allows search engines to access pages in the correct order.

- Make all URLs you want to “Allow:” or “Disallow:” are placed on their own line. If several URLs appear on a single line, crawlers will have difficulties separating them and you may run into trouble.

- Always use lowercase it to save your file, as file names are case-sensitive and don’t include special characters.

- Create separate files for different subdomains. For example, “example.com” and “blog.example.com” each have individual files with their own set of directives.

- If you must leave comments, start a new line and preface the comment with the # character. The # lets crawlers know not to include that information in their directive.

How to Test Your Results

Test your results in your Google Search Console account to make sure that the bots are crawling the parts of the site you want and blocking the URLs you don’t want searchers to see.

- First, open the tester tool and take a look over your file to scan for any warnings or errors.

- Then, enter the URL of a page on your website into the box found at the bottom of the page.

- Then, select the user-agent you’d like to simulate from the dropdown menu.

- Click TEST.

- The TEST button should read either ACCEPTED or BLOCKED, which will indicate whether the file is blocked by crawlers or not.

- Edit the file, if needed, and test again.

- Remember, any changes you make inside GSC’s tester tool will not be saved to your website (it’s a simulation).

- If you’d like to save your changes, copy the new code to your website.

Keep in mind that this will only test the Googlebot and other Google-related user-agents. That said, using the tester is huge when it comes to SEO. See, if you do decide to use the file, it’s imperative that you set it up correctly. If there are any errors in your code, the Googlebot might not index your page—or you might inadvertently block important pages from the SERPs.

Finally, make sure you don’t use it as a substitute for real security measures. Passwords, firewalls, and encrypted data are better options when it comes to protecting your site from hackers, fraudsters, and prying eyes.

Robots.txt FAQs

1. Where should I save my robots.txt?

Your robots.txt should be saved in the root domain of your website. The address should be lowercase and start with an “/.” For example, the file can be found at https://www.example.com/robots.txt but not at https://www.example.com/ROBOTS.TXT.

2. Should I always use wildcards in my disallow lines?

Using wild cards won’t be always necessary, and can actually cause problems if you’re not careful. When listing specific URLs, you should always list them completely—even if you think a wildcard might work.

3. Can I use robots.txt to block bots from a specific image?

Yes, you can. To block a specific image, you can use the “Disallow” command and specify the file type of the image (e.g., “Disallow: /*.png”).

4. Does my robots.txt need to be perfect for it to work?

While it’s essential to ensure your robots.txt is accurate, minor errors shouldn’t keep your file from working. You can use the tester tool in Google Search Console to test your file.

5. What if I want to block specific bots?

You can use the “User-agent” line to specify which bots to block. For example, if you want to block all bots except for Googlebot, you can use this:

User-agent: *

Disallow: /

User-agent: Googlebot

Allow: /

6. What does disallow mean in a robot.txt?

Adding “disallow” to your robots.txt file will tell Google and other search engine crawlers that they are not allowed to access certain pages, files, or sections of your website, directing them to avoid that content. This will usually also result in your page not appearing on search engine results pages.

7. What does user agent * disallow mean?

Adding “user agent *” gives you the opportunity to disallow only some crawlers. For example, if you want Googlebot to still crawl your page but you don’t want Facebot (Facebook’s crawler) to do it, you would type “user-agent: Facebot”. This will block Facebot but still allow all other crawlers to index your page. If you want to block everyone, you would type “user-agent: *”. The star will block every crawler.

Wrapping Up

Ready to get started with robots.txt? Great!

If you have any questions or need help getting started, let us know!