Weekly Roundup of Breaking Marketing News Brought To You Each Friday

News 4/8/2024 to 4/12/2024

This week: Learn which types of posts get the most engagement on LinkedIn, uncover the most popular forms of influencer content, and dive into the newest API.

Here's what happened this week in the digital marketing news:

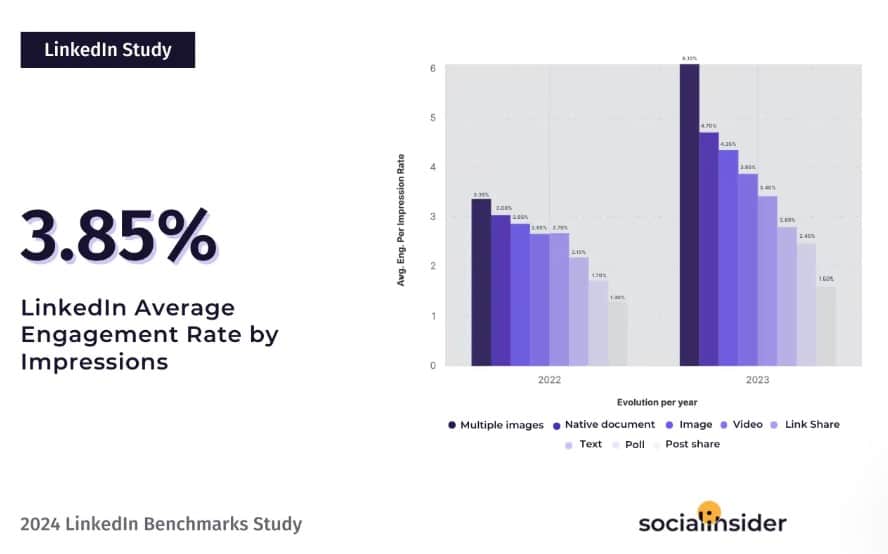

1. Which Types of Posts Get the Most Engagement on LinkedIn?

The folks at SocialInsider conducted a study to answer that very question.

Here are a few key findings from the research:

- Multi-image posts get the most likes and comments

- Videos get the most shares

- Polls generate the highest impression rate

- Multi-image posts with brief captions have the highest engagement rate

SocialInsider also says that the overall engagement rate on LinkedIn increased 44% year-over-year.

The study looked at pages with a follower count between 1,000 and 1 million during the period ranging from January 2022 to December 2023.

LinkedIn Engagement Study

2. eMarketer Reports Most Popular Form of Influencer Content

What’s the most popular form of influencer content on social media?

If you guessed cooking, that’s correct.

According to eMarketer, cooking recipes are the most popular form at 51% and are preferred over product reviews, tutorials, and lifestyle content.

eMarketer Survey on Influencer Content

3. Is Video a Valuable Tool for B2B Marketers?

It would appear so.

LinkedIn released an infographic this past week highlighting the value of video in B2B marketing.

Here are some of the key takeaways:

- 59% of B2B marketers identified video as a leading marketing technique they intend to use this year

- 2 out of 3 marketers say they’ll increase video use in the coming year

- Video facilitates authentic storytelling

LinkedIn also shared the various tools it provides that can help with video marketing.

Video is a Valuable Tool for B2B Marketing

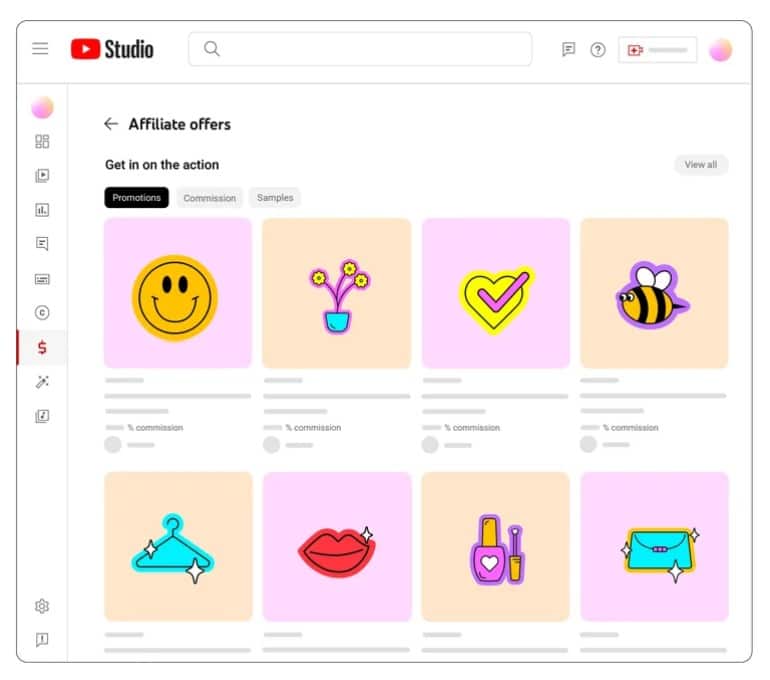

4. YouTube Shopping Updates Bring New Efficiency to Creators

Speaking of video, YouTube introduced new updates for shopping creators.

- Shopping Collections: Creators can set up their own collections to show off their favorite products. Collections show up in the product list, store tab, and video description.

- Affiliate Hub: Access to the latest list of Shopping partners, competitive commission rates, promo codes, and the ability to request samples from top brands.

- Tagging: Tag products and merchandise across the entire video library at once

- Fourthwall: Makes it easier for creators to create and manage stores directly in YouTube Studio

YouTube - New Affiliate Hub

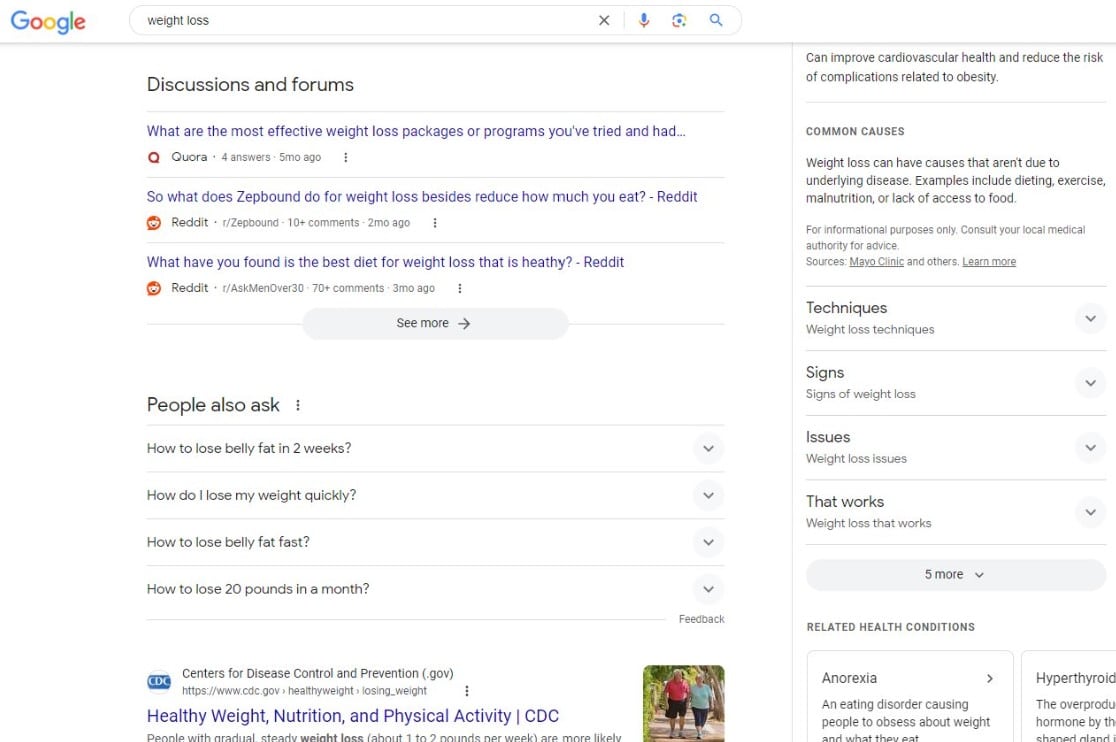

5. Why Does Google Show Reddit Search Results So Often?

That’s the essence of a question posed by Lily Ray this past week on X. She was particularly concerned about YMYL queries returning results with Reddit threads.

In response, Google Search Liaison Danny Sullivan answered by saying that the Reddit panel appears if the Google search algo determines that it’s “relevant and useful.”

Sullivan also said that, while SEOs might not appreciate the Reddit panel toward the top of the search results, searchers do appreciate it.

“They proactively seek it out,” he said. “It makes sense for us to be showing it to keep the search results relevant and satisfying for everyone.”

Sullivan also said that he’d pass Ray’s feedback to the search team.

Reddit Queries in Top Search Results

6. Should You Disallow Internal Footer Links?

Nope.

That’s according to John Mueller.

Recently on Reddit, somebody asked the following question: “Big site I'm working on and client has a handful of pages in their footer that have no backlinks, traffic, nor authority score. These pages however, according to client are important for some people there. Should I disallow them in Robots but keep them in footer?”

Mueller replied: “If you do this, future-you will be annoyed by the problems current-you is creating.”

In the past, Mueller has criticized the practice of making internal links nofollow.

“I think it’s a waste of time to do that,” he said.
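
To see what the proposed robots.txt change would actually do, here is a minimal sketch using Python's standard `urllib.robotparser`. The robots.txt rules, the `example.com` domain, and the footer paths are all hypothetical and are not taken from the Reddit thread.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that disallows one footer page.
ROBOTS_TXT = """\
User-agent: *
Disallow: /legacy-terms/
"""

# Hypothetical footer links on the same site.
FOOTER_LINKS = ["/legacy-terms/", "/contact/"]

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Map each footer link to whether a crawler is allowed to fetch it.
crawl_status = {
    path: parser.can_fetch("Googlebot", "https://example.com" + path)
    for path in FOOTER_LINKS
}

for path, allowed in crawl_status.items():
    print(f"{path}: {'crawlable' if allowed else 'blocked by robots.txt'}")
```

The link stays in the footer on every page, but crawlers are told not to fetch its target. That mismatch is the kind of setup Mueller is warning about.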

SEO Questions About Internal Linking

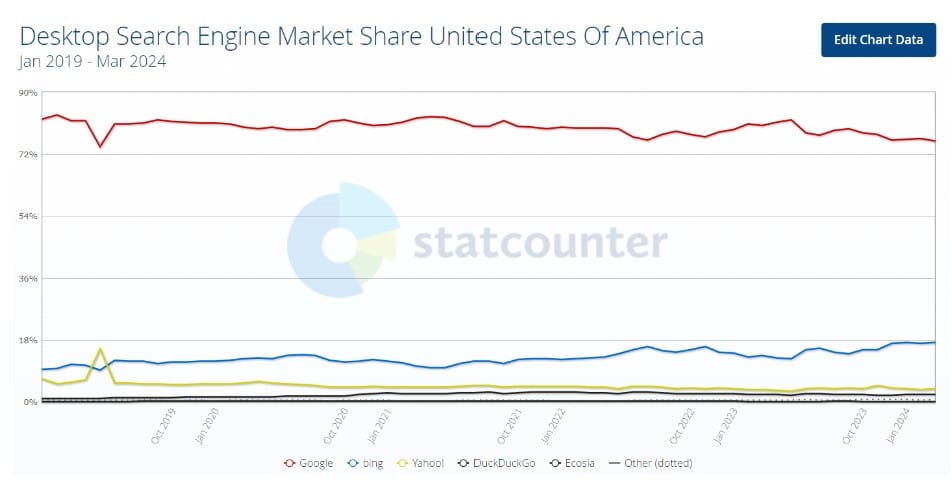

7. Are You Ignoring Bing to Your Peril?

So what’s the story with that “other” search engine these days?

According to Bing PM Fabrice Canel, “Bing usage extends beyond what many search engine optimizers (SEOs) may realize.”

He pointed to a Statcounter chart showing Bing steadily (although slowly) gaining market share since 2019.

“Additionally,” he said, “it’s important to remember that Bing powers several other search engines, including DuckDuckGo, Yahoo, Ask, Ecosia, Swisscows and more surfaces as Windows, Copilot and more. If you sum up all, the impact is even more significant.”

Search Engine Market Share via Desktop

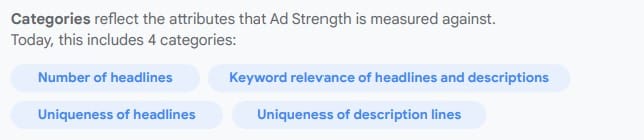

8. Does Google Use Ad Strength in the Ad Auction?

According to Google’s Ads Liaison Ginny Marvin, Google does not use ad strength in the ad auction.

The subject came up on X recently, with Marvin saying that ad strength “is a diagnostic tool that was developed to help advertisers understand how the diversity and relevancy of their creative assets can maximize the number of ad combinations that may show for a query.”

She went on to say that ad strength could explain the lack of impressions. But it would never prevent ads from entering into auctions.

Attributes that Affect Ad Strength

9. Who Is Snapchat Partnering With These Days?

This past week, Snapchat announced a series of new partnerships. The integrations will help you get the most out of advertising on the platform.

First up: Snap announced a partnership with Snowflake. That will enable you to “quickly implement Snap’s Conversions API (CAPI) signal solution without needing to build a bespoke back-end integration.”

Snapchat is also partnering with AppsFlyer. That’s an iOS-specific product that gives you insight via Mobile Measurement Partner (MMP) attribution.

Finally, Snap is updating its Event Quality Score (EQS). Use that to “understand signal health with greater precision.”

Homework

While the April showers are falling, you should have plenty of time to take care of these action items:

- Take a look at the TikTok guide on promoted posts to learn more about how to turn organic posts into ads.

- If you’re in the B2B space, put together a strategy for using videos on LinkedIn.

- And take a look at that study on which types of posts get the most engagement on LinkedIn. Make sure you’re creating that type of content.

- Take advantage of those new Snap partnerships to get more insight into your marketing on Snapchat.

News 4/1/2024 to 4/5/2024

This week: Find out where mobile advertising is growing the quickest, what new targeting features Meta is revealing, and how much time you should be focusing on backlinks.

Here's what happened this week in digital marketing.

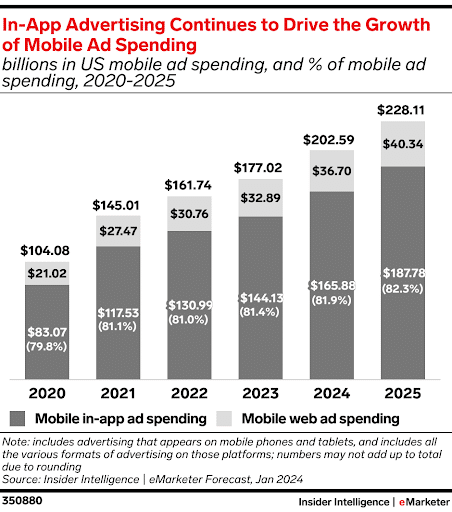

1. U.S. Mobile Advertising Is Growing Rapidly

US mobile ad spending will cross $200 billion this year, representing over half (51.2%) of total media dollars spent in the US and nearly two-thirds (66.0%) of digital ad dollars. The vast majority of mobile ad spending will take place in apps.

So, where is mobile advertising growing most quickly?

eMarketer In-App Advertising Drives Growth of Mobile Ad Spending

Apps will reach a dominant 81.9% share of mobile ad spending this year, but mobile web advertising also continues to add more dollars each year. Amazon accounted for 40% of ecommerce sales, and 4% of retail sales in 2023.

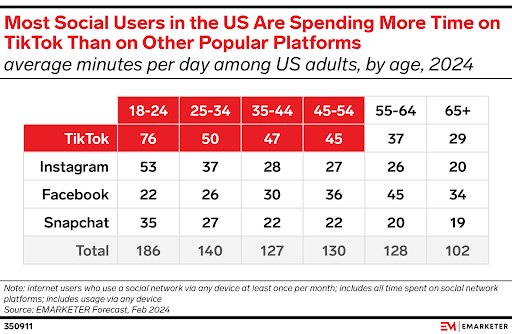

2. Gen Z Still Spends More Time on TikTok Than Any Other Age Group

According to eMarketer, time spent on social media among US adults who use social platforms will peak at an average of 1 hour and 50 minutes (1:50) next year (2025).

TikTok is still tops among most generations, but its growth is slowing—even among Gen Z. While it’s still incredibly popular, the app’s heyday could be coming to an end as it matures and deals with user gripes like its increased ad load and frustrations with TikTok Shop.

More Users Are Spending Time On TikTok

3. Meta's New Targeting Options Within Advantage+ Shopping Campaigns

No, this wasn’t an April Fool’s joke.

On Monday, Meta revealed new capabilities that let you generate reporting breakdowns of your audience, specifically those who have yet to convert.

New features include:

- Enhanced Audience Insights: Use it to distinguish your engaged customers from the rest of your audience

- Demographics By Audience: Within the Reports breakdown, you’ll see separate rows for New Customers, Existing Customers, and Engaged Customers

When using Advantage+ Shopping Campaigns, advertisers turn over most targeting control to the platform.

These new tools will help marketers analyze how many sales come from new customers vs. current customers, and how many people are engaging with your brand without converting.

4. Do Google's 'Top Ads' Always Appear at the Top?

Not necessarily.

Google recently updated its ads help document. Here’s how it now reads: “Top ads are adjacent to the top organic search results. Top ads are generally above the top organic results, although top ads may show below the top organic results on certain queries. Placement of top ads is dynamic and may change based on the user’s search.”

The Google help page says the same thing.

So “top ads” might not be the correct phrase to describe them.

Google’s Ads Liaison, Ginny Marvin, says that the definition change doesn’t affect how performance metrics are calculated.

Top Ads - Quote from Google

5. YouTube Works to Provide Creators With Deeper, Valuable Insights

YouTube wants content creators to optimize their content more effectively. To do so, they need to understand what content their audience likes.

So, they’re rolling out three new updates:

- Live Stream Reaction Analytics: Creators can monitor viewers’ reactions in real-time, seeing data on the number and types of reactions from viewers

- Impression Breakdown: See the number of impressions segmented by new vs returning viewers for a clearer view of what content your audience likes

- HDR Live Streaming: Provide your viewers with higher-quality video. Think crisper whites, deeper blacks, and colors that pop

You’ll find the tool in YouTube Studio Analytics.

6. Are You Spending Too Much Time Focusing on Backlinks?

This past week on the SEO subreddit, somebody asked why Google Search Console shows a couple of backlinks for a web page whereas Ahrefs shows nothing.

Google’s John Mueller responded to that.

“There’s no objective way to count links on the web,” he said. He went on to say that every tool has its own method for collecting backlinks.

He also said that digital marketers “should not focus” on backlinks.

“There are more important things for websites nowadays, and over-focusing on links will often result in you wasting your time doing things that don't make your website better overall.”

7. Will Small Improvements in Core Web Vitals Boost Your Rank?

It doesn’t look that way.

In a recent Search Off the Record podcast, John Mueller said that obsessing over your yellow or even red Core Web Vitals scores won’t bear much fruit.

“I think a big issue is also that site owners sometimes over-fixate on the metrics themselves,” he said. “They see some number, and it’s like, ‘Oh my gosh, I have to get this to like some other number, some higher state.’ And then they spend months of time working on this. And they see this as they’re doing something for their Search rankings.”

Mueller went on to say that “incremental changes” probably won’t be visible in search.

8. How Can You Get Additional Insights From Reddit?

Reddit just announced a partnership with Cision. It’s an integration that enables Cision users to gather trend info from Reddit’s 100,000 active online communities.

“Cision provides a social suite that monitors public conversations about brands, topics, products, and campaigns,” Reddit said in a statement. “By accessing Reddit’s Data API, Cision can layer more rich and real-time insights into their suite of products. This will give customers a richer view of consumer preferences, empowering them to develop data-driven marketing and communication strategies faster and with greater accuracy.”

And Cision says it will use Reddit’s API to “supercharge” the development of AI-driven reputation and audience analysis tools.

9. How Is X Doing These Days?

According to a new report by Edison Research, Twitter/X usage dropped 30% in the past year.

The research shows that the number of U.S.-based X users fell from 77 million in 2023 to 55 million this year.

Other recent third-party reports also show a decline in X usage. None of them show a drop of 30%, though.
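
As a quick sanity check on the headline figure, the user counts quoted above can be turned into a percentage. This is only a back-of-the-envelope sketch. The `percent_change` helper is a hypothetical name, and the inputs are the rounded figures from this roundup, not Edison Research's underlying data.

```python
def percent_change(old, new):
    """Return the percentage change from old to new."""
    return (new - old) / old * 100

# U.S.-based X users in millions, as quoted above.
users_2023 = 77
users_2024 = 55

drop = percent_change(users_2023, users_2024)
print(f"Change in U.S. users: {drop:.1f}%")
```

On these rounded figures the decline works out to just under 29%, which is consistent with a headline of roughly 30%.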

X, for its part, says that it has 250 million daily active users worldwide. That’s the same number it reported in November 2022.

10. When Is the Best Time to Make Changes for the Core Update?

Recently on Reddit, a website owner asked whether the March Core Update had finished rolling out and when to start making changes to the site in response.

The website owner also said that the site had already lost 60% of its traffic.

In response, John Mueller answered that no, the core update hasn’t finished rolling out yet. And he told the SEO to start making changes right away.

“Regardless, if you have noticed things that are worth improving on your site, I’d go ahead and get things done,” he said. “The idea is not to make changes just for search engines, right? Your users will be happy if you can make things better even if search engines haven’t updated their view of your site yet.”

Advice on Dealing with the March 2024 Core Update

11. Can You Use ChatGPT Without a Login?

You can now.

A recent announcement from OpenAI offers this good news: “Starting today, you can use ChatGPT instantly, without needing to sign-up.”

OpenAI also says that content shared in ChatGPT might be used for training its large language model (LLM). However, the company says you can turn that off via the Settings.

And then there’s this: “By sending a message, you agree to our Terms. Read our Privacy Policy. Don’t share sensitive info. Chats may be reviewed and used to train our models. Learn about your choices.”

Be careful out there.

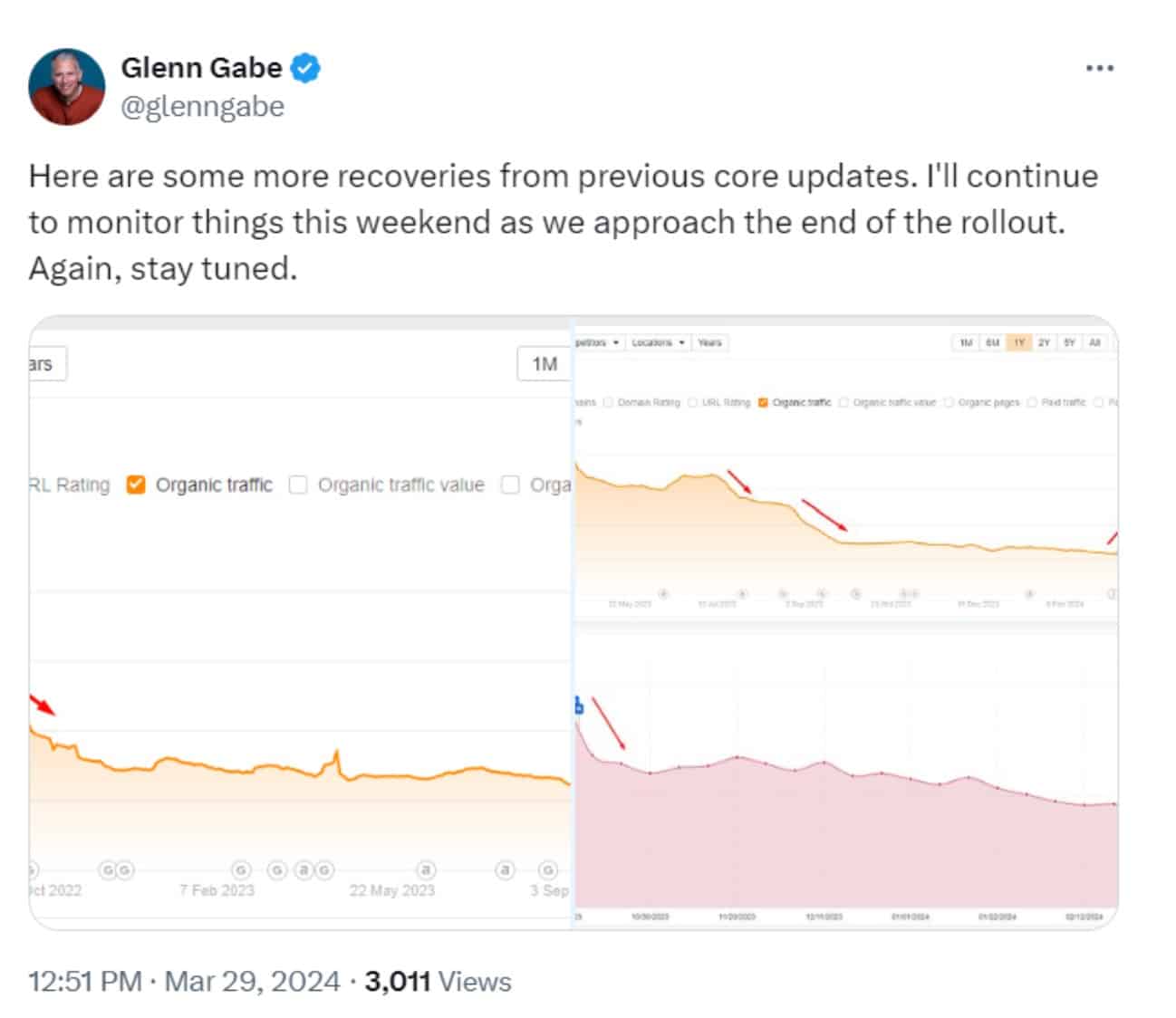

12. Are Websites Recovering From The Core Update?

I mentioned last week that no sites hit by the recent Helpful Content Update have recovered. But what about sites that got adversely impacted by the Core Update?

According to Glenn Gabe, some of them have bounced back. He posted a few screenshots on X this past week showing organic traffic recoveries.

Regarding the Helpful Content Update, though, he also posted this: “Checked the visibility of 360+ sites impacted by the Sep HCU(X) and none have bounced back at all still. Most are down even more.”

Looks like those folks are in trouble.

March 2024 Core Update Impacts

Homework

Before the NCAA basketball season comes to a close, take care of these action items:

- If you think your site got hit by either one of the recent updates, feel free to take action now. There’s no reason to wait any longer.

- If you’re into Reddit marketing, take a look at the Cision integration to gain more trend insights.

- Also, take a look at those new YouTube analytics to learn more about the people watching your videos.

News 3/25/2024 to 3/29/2024

This week: A Google study reveals SGE might be harming organic search traffic, new stats on sites affected by spam updates, and Google's got some tips on posting content.

Here's what happened this week in digital marketing.

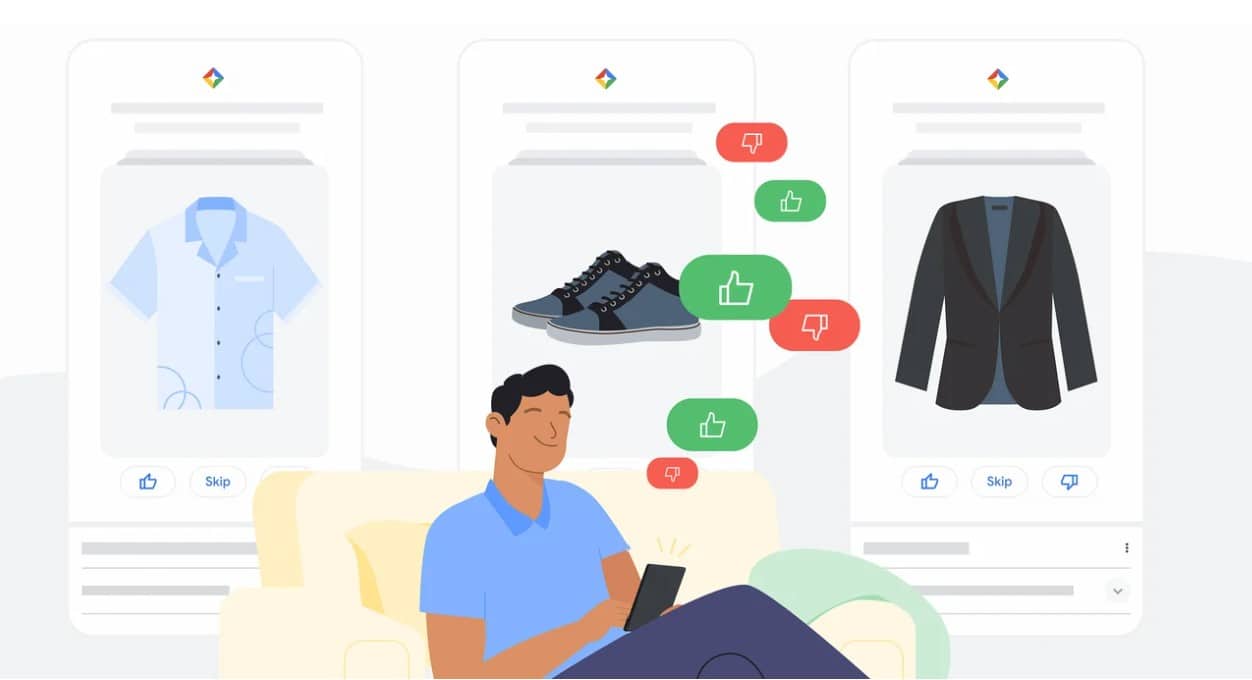

1. Google’s Testing New Generative AI Product Search Elements

The way you shop for clothes online is about to change… for the better.

Google’s trying new shopping elements to help users speed up their discovery process.

New features include:

- Related Style Recommendations: Upvote or downvote similar styles

- Virtual Try-On: See clothing on models ranging in sizes XXS-4XL

Google’s been testing out these features and soon they’ll be available to more users through its experimental shopping experience.

Google New Shopping Elements

2. Another Google Analytics Bug!

Earlier this week, Google confirmed a bug within Google Analytics.

The bug left users wondering about their real-time traffic.

Some users reported seeing zero traffic, while others reported everything was fine.

Lucky for you, the bug’s been fixed and all real-time traffic is accurate again.

Example of Reporting Showing No Real-Time Traffic

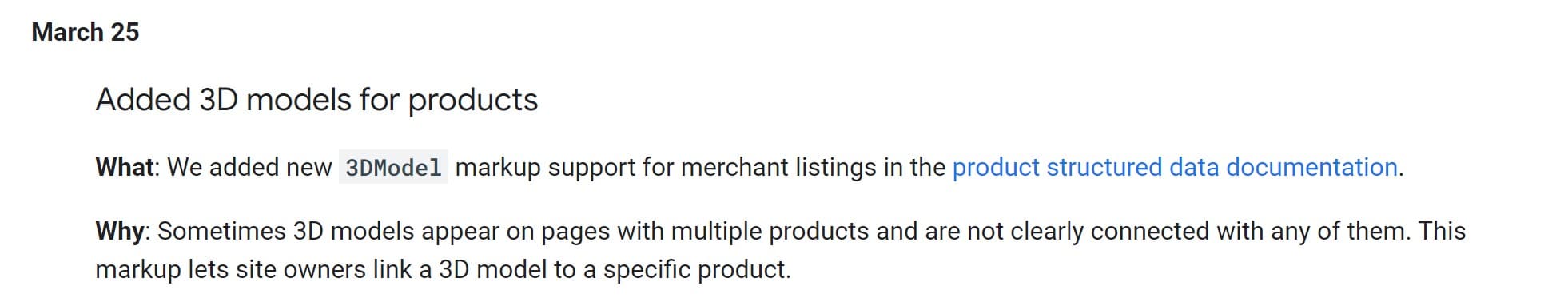

3. Google Introduces New Product Structured Data Type

Turns out Google’s into 3D.

The company added a new 3DModel markup type to its documentation.

Could a 3D product display rich result be on the way?

Google says 3DModels are increasingly used on product webpages, so this will give merchants a new way to add information.

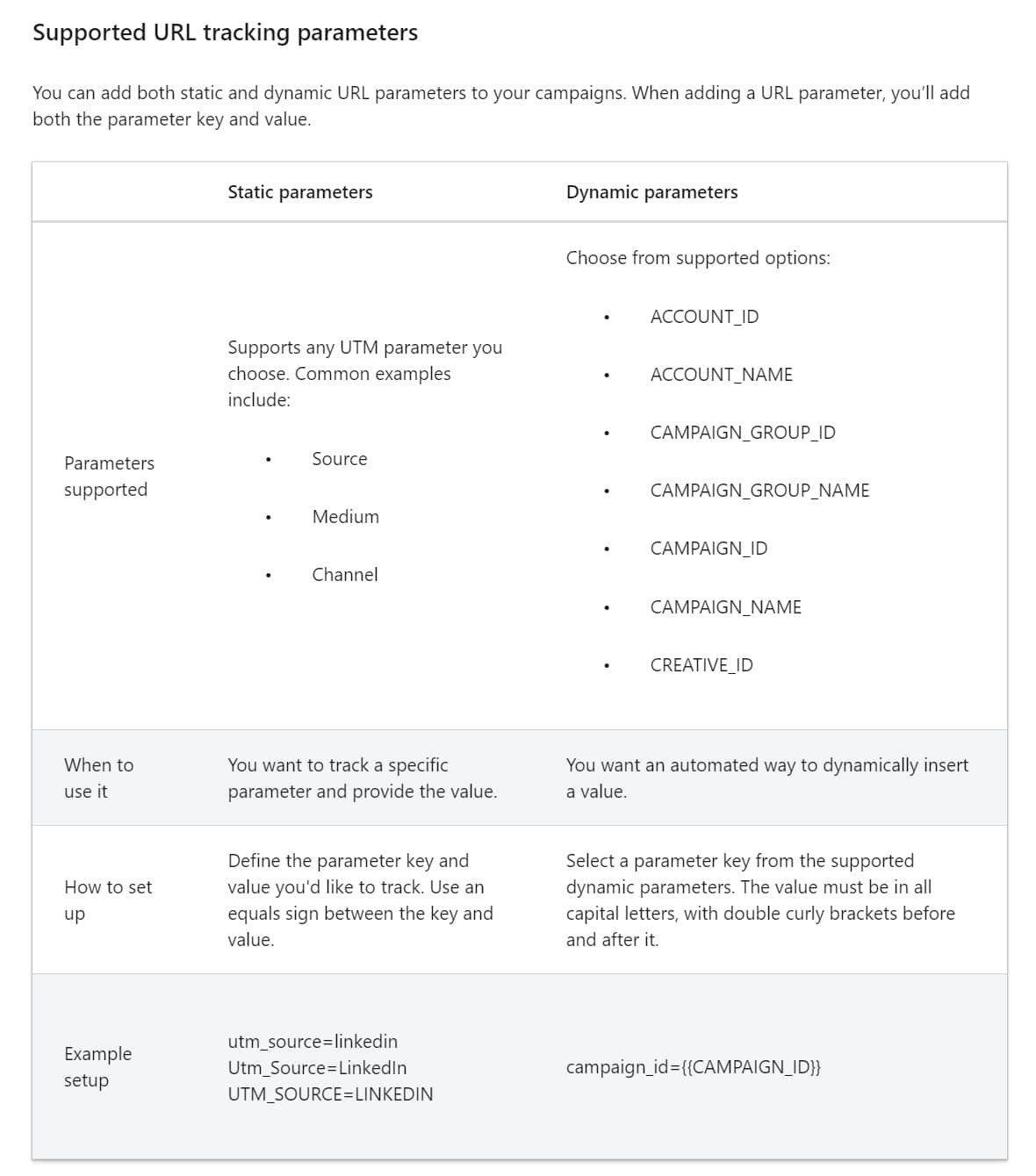

4. LinkedIn Ads Releases New Campaign Tracking Tool: Dynamic UTMs

LinkedIn marketers listen up!

The platform is launching its new solution to campaign monitoring that does not involve third-party cookies.

Dynamic UTMs, which will be fully rolled out by the end of March, eliminate the need to manually set up UTM parameters.

Why is this a big deal?

Manually setting up UTM parameters is time-consuming, inefficient, and usually results in errors.

So LinkedIn is trying to combat these issues.
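
For context, here is a minimal sketch of the manual tagging step that Dynamic UTMs are meant to replace. It uses Python's standard `urllib.parse`. The `add_utm` helper, the landing page URL, and the parameter values are hypothetical, and nothing here reflects how LinkedIn implements the feature.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit

def add_utm(url, source, medium, campaign):
    """Append UTM parameters to a URL, keeping any existing query string."""
    parts = urlsplit(url)
    query = dict(parse_qsl(parts.query))
    query.update({
        "utm_source": source,
        "utm_medium": medium,
        "utm_campaign": campaign,
    })
    return urlunsplit(parts._replace(query=urlencode(query)))

# Hypothetical landing page and campaign values.
tagged = add_utm(
    "https://example.com/landing?ref=home",
    source="linkedin",
    medium="paid_social",
    campaign="spring_launch",
)
print(tagged)
```

Doing this by hand for every ad and campaign is where typos and inconsistent naming tend to creep in.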

LinkedIn URL Tracking Parameters

5. Google’s Study Reveals SGE Might Harm Brand Visibility

The study looks at brand visibility and organic search traffic. Here are the findings:

- When an SGE box is expanded, the top organic result drops by over 1,200 pixels on average, significantly reducing visibility.

- 62% of SGE links come from domains outside the top 10 organic results.

- Ecommerce, electronics, and fashion-related searches saw the greatest disruption, though all verticals were somewhat impacted.

We’ll see what proves true as Google continues to push its Search Generative Experience.

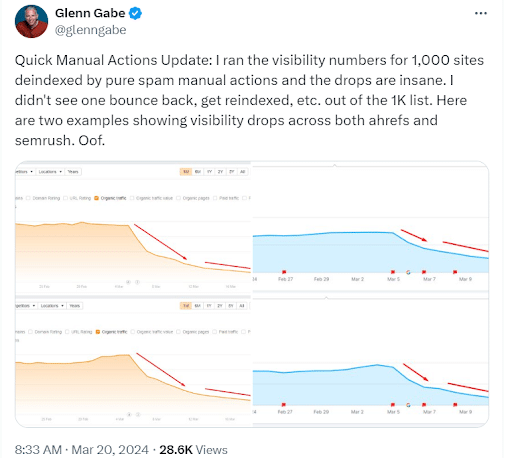

6. How Many of the Sites Affected by the Spam Update Are Recovering?

It looks like Google’s judgment on spammy sites is final.

Glenn Gabe shared some insights about a thousand sites that got deindexed by Google during the March spam update. He didn’t see one bounce back.

Gabe posted charts showing a couple of examples. The charts came from both Ahrefs and Semrush.

In a subsequent post on X, Gabe said that some legitimate sites are also getting hit by the spam update. He did not address those in this analysis, though.

Sites Affected By Spam Update

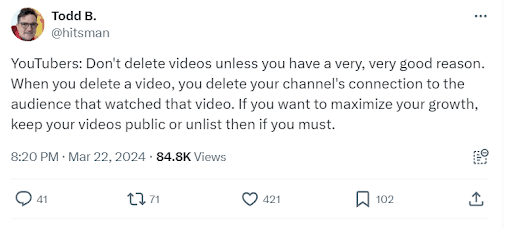

7. Should You Delete YouTube Videos?

Nope.

That’s the message from YouTube’s homepage product lead.

“YouTubers: Don't delete videos unless you have a very, very good reason,” he said. “When you delete a video, you delete your channel's connection to the audience that watched that video. If you want to maximize your growth, keep your videos public or unlist them if you must.”

YouTube’s creator liaison, Rene Ritchie, reposted that message on X. So the advice comes from a couple of different YouTube sources.

Don't Delete Your YouTube Videos

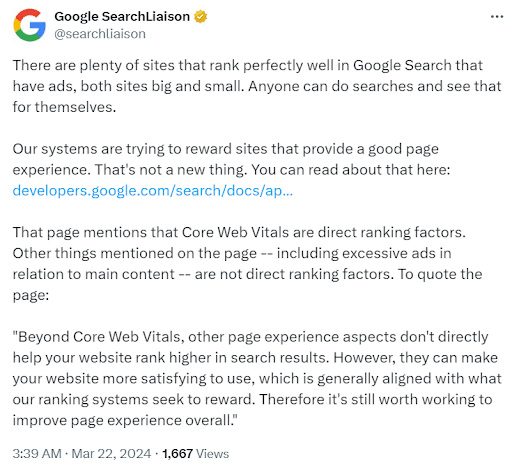

8. Do Ads Prevent Your Site From Getting a Good Rank?

Not at all, according to Google Search Liaison Danny Sullivan.

This past week, somebody took to X to suggest that sites with ads will get pushed down in the search engine results pages (SERPs).

Sullivan responded to that as follows: “There are plenty of sites that rank perfectly well in Google Search that have ads, both sites big and small. Anyone can do searches and see that for themselves.”

He went on to say that Google rewards pages that offer a good user experience.

Then he quoted from Google’s own documentation: “Beyond Core Web Vitals, other page experience aspects don't directly help your website rank higher in search results. However, they can make your website more satisfying to use, which is generally aligned with what our ranking systems seek to reward. Therefore it's still worth working to improve page experience overall.”

Google Systems Reward Sites with Good Page Experience

9. What Advice Does Google Give to People Who Got Affected by the Helpful Content Update?

Recently on X, Lee Funke pointed out that her website got hit by the recent Helpful Content Update and asked Google’s Search Liaison for some advice.

In response, Danny Sullivan referred her to a recent blog post. Specifically, this part: “Just as we use multiple systems to identify reliable information, we have enhanced our core ranking systems to show more helpful results using a variety of innovative signals and approaches. There's no longer one signal or system used to do this, and we've also added a new FAQ page to help explain this change.”

He went on to say that the recent changes look more closely at pages rather than entire sites.

Sullivan also pointed out that ranking can drop for a variety of reasons, including that Google simply determines that other content is more helpful.

10. What Does Google Really Want You to Show on Your Website?

This past week on X, somebody suggested a novel SEO idea: add a shopping cart to your website.

Another poster replied that she might do the same, because it “shows Google” that her site does more than just affiliate/review content.

Danny Sullivan responded to that as follows: “I wouldn't recommend people start adding carts because it ‘shows Google’ any more than I would recommend anyone do anything they think ‘shows Google’ something.”

He went on to say that you should focus on creating content that’s designed for visitors, not Google.

11. Does Mass Publishing Convince Google That You Have Quality Content?

No.

As you might recall, last week I mentioned that Google will crawl quality content more often.

Some SEOs apparently got it in their heads that if they publish more content, Google will consider it high quality.

Google’s John Mueller addressed that on LinkedIn: “I see some folks try to turn this around, pushing their content to be crawled more frequently, so that Google will think it's good. IT DOES NOT WORK THAT WAY, IT DOES NOT MAKE ANY SENSE. HELLO. Your kids won't start loving kale if you force it down their throats in the same way they stuff ice cream in their faces.”

He went on to say that you should “make truly awesome things.”

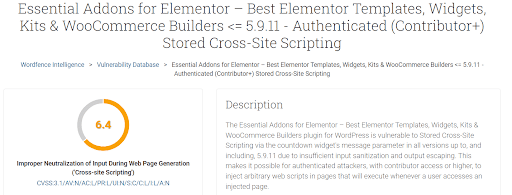

12. Is Your Site Affected by This WordPress Plugin Vulnerability?

If you’ve got a WordPress website and the Essential Addons for Elementor plugin, your site could be open to attack.

According to a security bulletin from Wordfence, the plugin includes a stored cross-site scripting vulnerability. That means a hacker can upload a malicious script that affects visitors.

Fortunately, the problem is fixed in v5.9.13 of the plugin.
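
If stored cross-site scripting is new to you, the generic sketch below shows the idea. It is not the plugin's code (WordPress plugins are written in PHP), and the `render_unsafe` and `render_safe` helpers and the sample comment are hypothetical.

```python
import html

# Hypothetical user-supplied value that was saved and is later shown to visitors.
stored_comment = '<script>alert("xss")</script>'

def render_unsafe(comment):
    """Insert stored text into the page as-is, so a script tag would run."""
    return "<p>" + comment + "</p>"

def render_safe(comment):
    """Escape stored text first, so the browser displays it instead of running it."""
    return "<p>" + html.escape(comment) + "</p>"

print(render_unsafe(stored_comment))
print(render_safe(stored_comment))
```

The fix for site owners is still simply to update the plugin. The sketch only illustrates why unescaped stored input puts visitors at risk.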

WordPress Vulnerability

13. What’s the Latest Media Type Available in Structured Data?

It’s 3D model markup.

Google just announced the new media type on its Search Central “What’s New” section.

It’s designed for use with ecommerce sites. Some sites display 3D models alongside product descriptions to give shoppers more info about the item offered for sale.

The whole point of the new addition is to make it clear that the 3D model is associated with a specific product.

In the past, 3D models appeared on pages with multiple products. It wasn’t always clear which product related to the model.
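
Here is a rough sketch of what tying a 3D model to a single product could look like, built as JSON-LD with Python's standard `json` module. The product name and file URL are hypothetical, and the property layout (`subjectOf` containing a `3DModel` with a `MediaObject` encoding) is an assumption based on schema.org vocabulary, so check Google's documentation before using it.

```python
import json

# Hypothetical product. The property layout is an assumption based on schema.org.
product_markup = {
    "@context": "https://schema.org/",
    "@type": "Product",
    "name": "Example 3-Seat Sofa",
    "subjectOf": {
        "@type": "3DModel",
        "encoding": {
            "@type": "MediaObject",
            "contentUrl": "https://example.com/sofa.gltf",
        },
    },
}

# Serialize to the JSON-LD text that would sit in a script tag on the product page.
json_ld = json.dumps(product_markup, indent=2)
print(json_ld)
```

Because the model is nested inside the product, the markup states which product it belongs to even when a page lists several items.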

3D Model Markup

14. What’s This Week’s TikTok Guide?

Another week, another guide from TikTok.

This time it’s about lead generation. And TikTok released it in partnership with HubSpot.

It’s got tips on:

- Lead gen forms

- Hooks

- Messaging

- Calls to action

The guide also advertises a limited-time discount for Lead Gen Ads. You can get a $1,500 ad credit for a $1,000 investment.

TikTok & HubSpot Lead Generation Playbook

Homework

Take some time away from crying over your broken March Madness bracket to handle these action items:

- If you use 3D models on your ecommerce site, take advantage of that new markup.

- If you’re using that Essential Addons for Elementor plugin, be sure to upgrade to the latest version of the plugin.

- Avoid deleting your old YouTube videos.

- Check out that latest TikTok guide on lead generation.

News 3/18/2024 to 3/22/2024

This week: Google's spam update is complete, TikTok rolls out a new program for Creators, and LinkedIn introduces a way to sponsor organic posts.

Here's what happened this week in digital marketing.

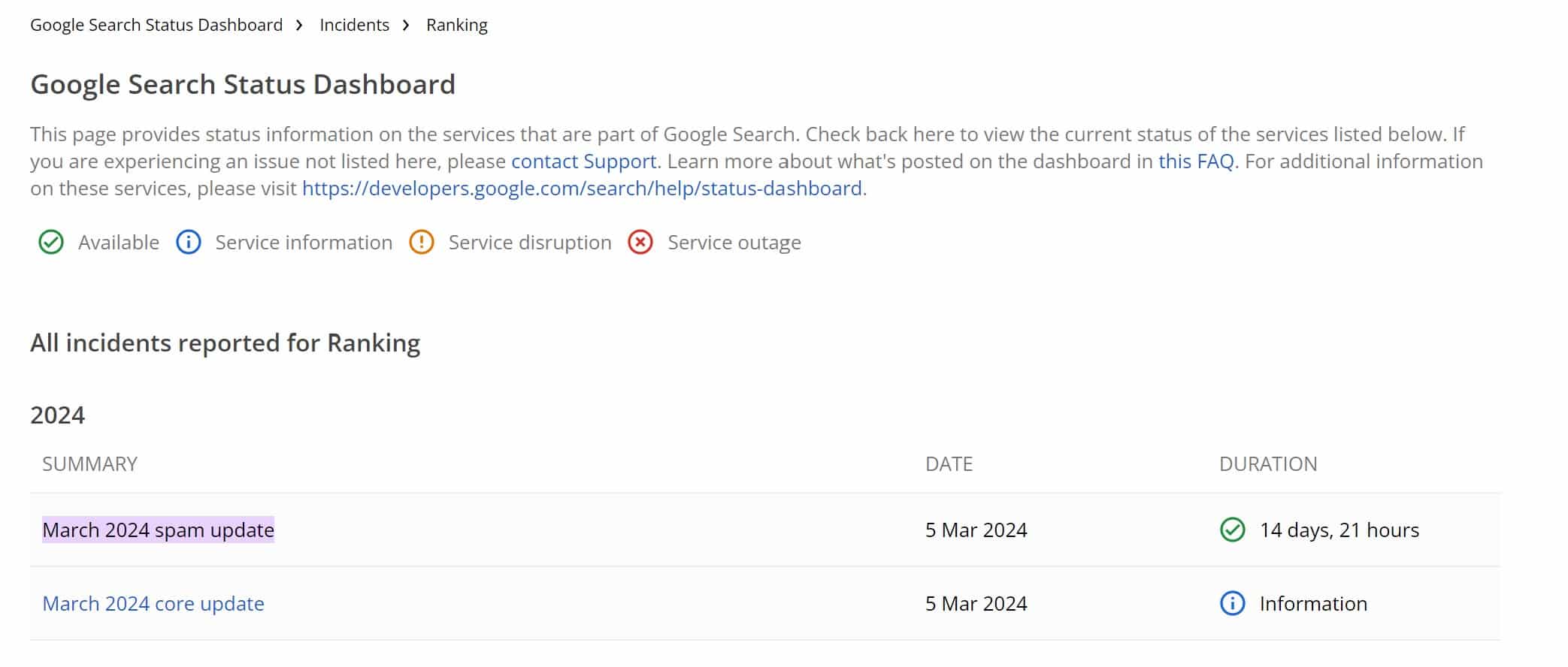

1. Google March 2024 Spam Update is Complete

Although the March 2024 core update is still happening, Google announced earlier this week that the March 2024 spam update rollout was completed.

Google introduced three new spam policies:

- Scaled content abuse

- Expired domain abuse

- Site reputation abuse

The first two went into effect immediately following the update. The site reputation abuse policy will not go into effect until May, giving site owners the chance to clean up their act.

Google is working hard to combat spam and create the best user experience possible.

Google Search Status Dashboard

2. Do High-Quality Web Pages Get Crawled More Often?

Yep. That’s according to Google’s Gary Illyes. In a recent podcast, Illyes gave some insight into the crawl budget concept.

He said that Google crawls web pages based on a couple of algorithms.

The first one is the scheduler. That tells the bot which pages to crawl.

But then there’s something else. It’s based on “feedback from search.”

“If search demand goes down, then that also correlates to the crawl limit going down,” Illyes said.

So pages that have high “search demand” (that is, high-quality pages) get crawled more often.

Search Off the Record Podcast

3. What’s the Latest With Advertising on Reddit?

It’s free-form ads. They look just like user-generated content on the platform.

Think of them as native ads for Reddit.

Reddit recently announced the new ad type in a blog post. The company calls it “one of the best formats to launch a product, introduce a brand to a new audience or share a seasonal shopping guide.”

Unsurprisingly, the new format also enables you to engage with people in your target audience on Reddit. You can turn your ad into what Redditors call a megathread.

Also, you can use free-form ads with multiple media types.

4. How Much Is SGE Costing Publishers?

About $2 billion. That’s according to a study published by Raptive.

Why? Because AI could trigger a decline of as much as 60% in search traffic. That means fewer clicks to your landing pages.

Raptive conducted the study by comparing Google’s current results with search generative experience (SGE) results for 1,000 of the company’s most popular keywords.

The research found that some keywords returned no SGE results. However, others returned results that included links to Raptive websites.

Raptive calculated the loss based on those results. For its 5,000-site portfolio, the loss amounted to a 25% drop in search traffic.

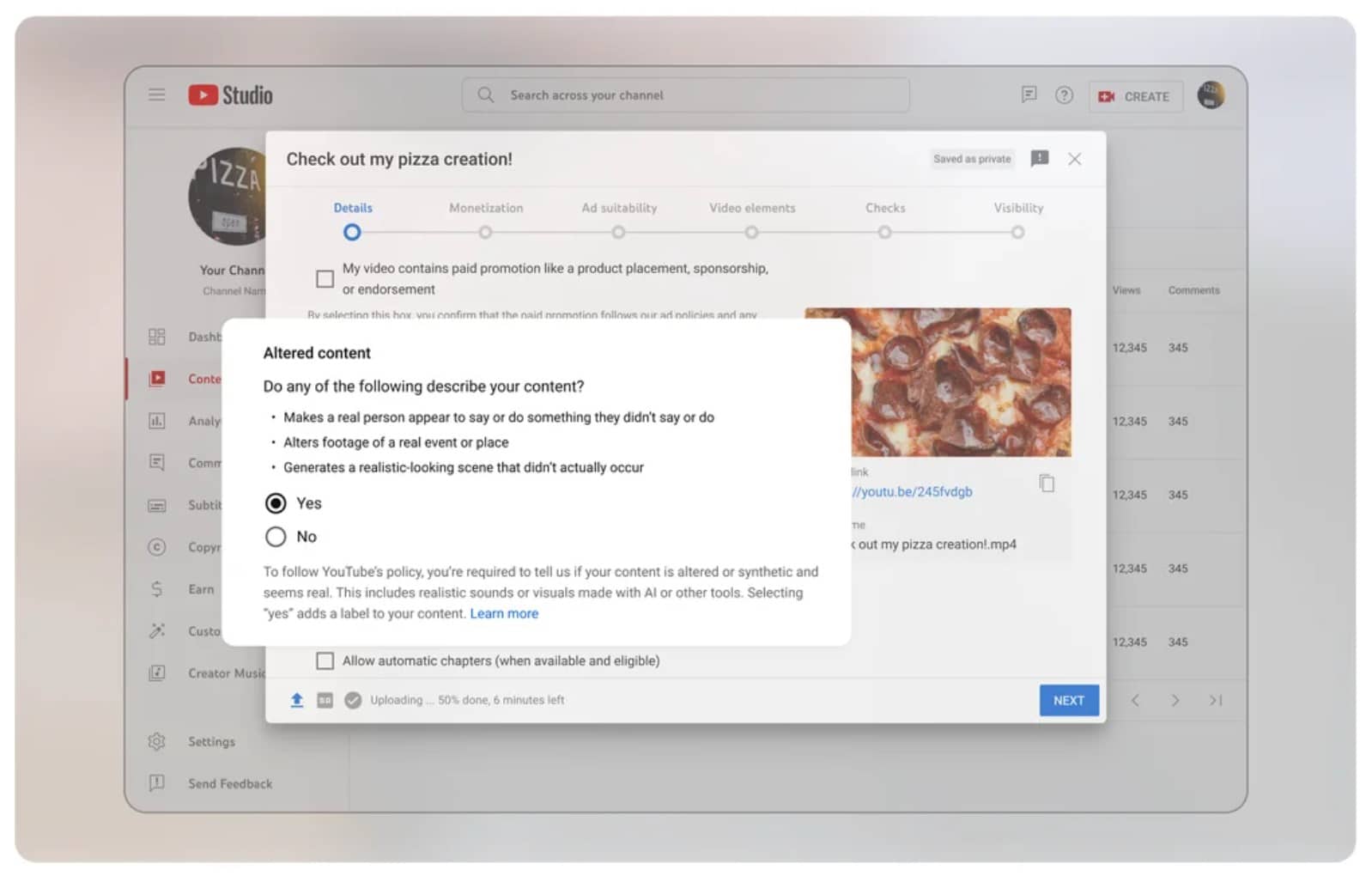

5. How Is YouTube Handling AI Content?

According to a new announcement by the YouTube team, you’ll need to disclose AI-generated content in your videos.

A new UI feature in Creator Studio displays a checkbox with this text: “is altered or synthetic and seems real.” You have to check that box if your video includes content generated with the assistance of an AI tool.

YouTube will let viewers know that it’s not real footage.

However, YouTube says that AI-generated scripts and other production elements don’t require disclosure.

YouTube Requires Creators to Disclose Altered Content

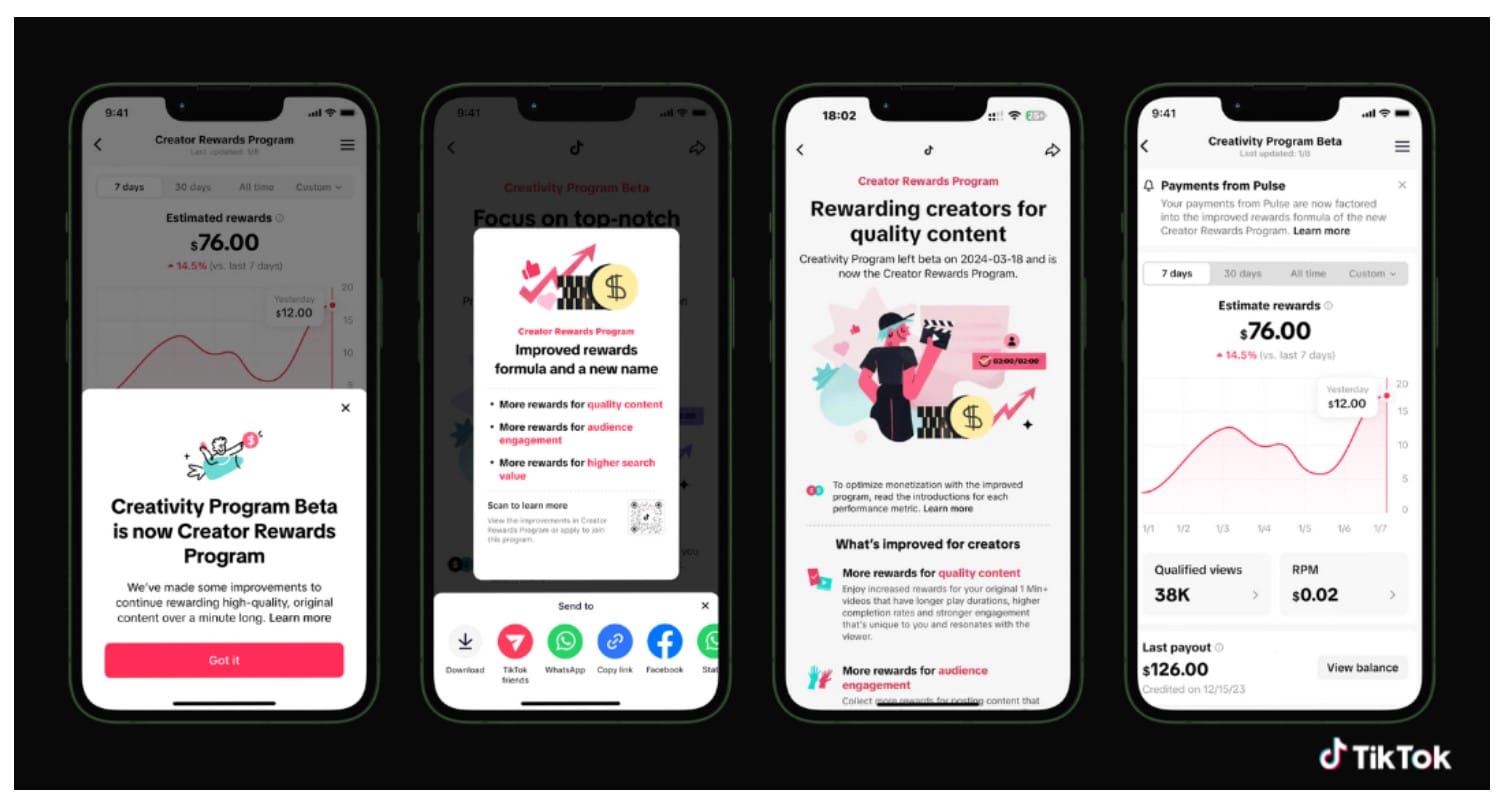

6. How Is TikTok Rewarding Successful Publishers?

TikTok just rolled out a Creator Rewards Program. It’s designed to reward successful publishers on the platform.

In the announcement, TikTok says that content creators will get rewarded based on four metrics:

- Originality

- Play duration

- Search value

- Audience engagement

Also, TikTok launched a Creator Search Insights tool. It gives you stats on trending search topics.

Use that tool to beef up the “search value” metric in your content.

New TikTok Creator Rewards Program

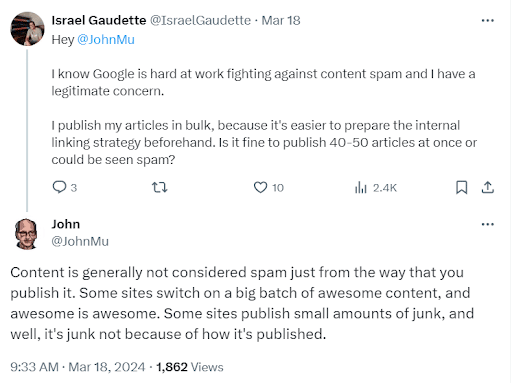

7. Does Google Consider Content Published in Bulk As Spam?

Nope. And that’s good news if you crank out lots of content on a scheduled basis.

The subject came up this past week on X. Somebody asked Google’s John Mueller if publishing articles in bulk triggers a spam alert to Googlebot.

“Content is generally not considered spam just from the way that you publish it,” Mueller replied. “Some sites switch on a big batch of awesome content, and awesome is awesome. Some sites publish small amounts of junk, and well, it's junk not because of how it's published.”

8. Is the Maximum Length for Instagram Reels Going to Change?

It looks that way. According to a Threads post by Justin Jarvis, some Instagram users are seeing a popup informing them that they can create Reels up to 3 minutes in length.

The previous limit was 90 seconds.

Meta recently reported that Reels views are up 20% year-over-year.

Instagram Reel Length is Changing

9. What Are the Latest Stats for X Usage?

According to a series of posts on X, 250 million people use the platform every day.

Here are a few other key stats:

- On average, users spend 30 minutes a day on X

- Daily average user time spent on X has grown 13% YoY

- Mobile time spent increased 17% in the last 6 months

- X sees more than 8 billion daily active user minutes on average

- DAU minutes have increased 10% YoY

- 1.7 million people join X every day

X uses those stats to claim its platform is “indispensable.”

10. How Would You Like to Sponsor Organic Posts on LinkedIn?

LinkedIn just announced that you can now promote content from any user via Thought Leader ads.

In the past, you could only promote posts from verified employees.

According to LinkedIn, 73% of decision-makers say that a company’s thought-leadership content is more trustworthy than marketing materials when it comes to assessing that company’s core competencies.

You can set up Thought Leader ads from Campaign Manager.

Homework

Spring has sprung. But before you check out those blooming flowers, take care of these action items:

- If you’re in the B2B space, think about using Thought Leader ads. You might reach people you’d otherwise miss.

- If you’re using AI to generate video content, be sure to check the appropriate box on YouTube.

- Think about using Reddit “native ads” on a subreddit consisting of people in your target market.

- Think about how you can use longer Instagram Reels to boost your brand.

News 3/11/2024 to 3/15/2024

A ban on TikTok might be coming, there's a new Core Web Vitals update, and TikTok is celebrating International Women's Month. Here's what happened this week in digital marketing news.

1. TikTok Might Be Banned

The US House of Representatives voted Wednesday to pass a bill that could lead to a nationwide ban against TikTok, one of the world's most popular social media apps. It’s not yet clear what the future of the bill will be in the Senate.

Here's what you need to know about the vote and what may happen next:

- Who Voted for the Bill: The House vote was 352 to 65, with 50 Democrats and 15 Republicans voting in opposition. In a rare show of bipartisanship, the measure advanced unanimously out of the powerful House Energy and Commerce Committee, and President Joe Biden has said he would sign the bill if it makes it to his desk.

- Why the Bill Passed: Lawmakers supportive of the bill have argued TikTok poses a national security threat because the Chinese government could use its intelligence laws against TikTok's Chinese parent company, ByteDance, forcing it to hand over the data of US app users.

- What the Legislation Would Do: The bill would prohibit TikTok from US app stores unless the social media platform — used by roughly 170 million Americans — is spun off from ByteDance. The bill would give ByteDance roughly five months to sell TikTok. If not divested by that time, it would be illegal for app store operators such as Apple and Google to make it available for download.

- Uncertain Future in the Senate: Senate Majority Leader Chuck Schumer remained uncommitted Wednesday to the next steps in the Senate, just saying that the chamber will review the legislation. Senate Intelligence Committee Chairman Mark Warner, a Virginia Democrat, and the panel’s top Republican, Marco Rubio of Florida, urged support for the House bill, citing the strong showing in Wednesday’s vote. Democratic Sen. Maria Cantwell, the chair of the Senate Commerce, Science and Transportation Committee, wants to create a durable process that could apply to foreign entities beyond TikTok that might pose national security risks.

- What TikTok is Saying: TikTok has called the legislation an attack on the constitutional right to freedom of expression for its users. China’s foreign ministry called the bill an “act of bullying.” In a video posted on X (formerly Twitter), CEO Shou Chew thanked the community of TikTok users, and said the company has invested in keeping user "data safe and our platform free from outside manipulation." He warned that if the bill is signed into law, it will impact hundreds of thousands of American jobs and take "billions of dollars out of the pockets of creators and small businesses."

- Opposition to Banning TikTok: Former President Donald Trump, who was once a proponent of banning the platform, has since equivocated on his position, while Democrats are facing pressure from young progressives among whom TikTok remains a preferred social media platform.

- Potential Antitrust Issues: The market for social media services is highly concentrated, which could make it hard for TikTok to even find a buyer that US competition regulators could accept, antitrust experts say.

2. Access to Consumer Data is More Valuable Than Ever

With third-party cookies on the way out, access to customer data is more valuable to brands than ever.

Over half (59%) of US digital retailers say that gathering relevant customer data to deliver a more personalized experience is a primary concern or challenge for them in 2024, per a December 2023 survey from Bolt.

In addition, 37% of US brands and agencies say their greatest concern or challenge for media investments in 2024 is having enough first-party data for targeting, activation, etc., per a November 2023 report from the Interactive Advertising Bureau.

By leveraging loyalty programs, brands can collect first- and zero-party data.

3. What’s the Latest With Core Web Vitals?

This week, Google officially replaced one of its Core Web Vitals metrics.

As of Tuesday, March 12, First Input Delay (FID) gave way to Interaction to Next Paint (INP).

If you’re unfamiliar with INP, it measures a web page’s responsiveness. It checks the latency associated with user interactions.

Specifically, it improves the measurement of how quickly your website reacts to user interactions including:

- Clicks on interactive elements

- Taps on interactive elements on touchscreen devices

- The press of a key on a physical or onscreen keyboard

This update, by the way, has nothing to do with the ongoing core algorithm change.
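
If you want a quick way to read your own INP numbers, here's a minimal Python sketch. It assumes the thresholds Google publishes for INP (200 ms or less is "good," over 500 ms is "poor"), which come from Google's guidance and not from the announcement above. The function name and the sample values are hypothetical and only there for illustration.

```python
# Assumed thresholds, in milliseconds, from Google's published INP guidance.
GOOD_MAX_MS = 200
NEEDS_IMPROVEMENT_MAX_MS = 500


def rate_inp(inp_ms: float) -> str:
    """Return a rating label for an INP value given in milliseconds."""
    if inp_ms <= GOOD_MAX_MS:
        return "good"
    if inp_ms <= NEEDS_IMPROVEMENT_MAX_MS:
        return "needs improvement"
    return "poor"


if __name__ == "__main__":
    # Hypothetical INP readings for three pages (not real data).
    sample_pages = {"/home": 150, "/pricing": 350, "/checkout": 620}
    for path, inp_ms in sample_pages.items():
        print(f"{path}: {inp_ms} ms is {rate_inp(inp_ms)}")
```

Swap in the INP values from your own reporting tool to see which pages need attention first.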

4. What’s the Latest With That Google Algo Change?

The Google core algorithm update is still rolling out. So it’s difficult to assess the full impact as of now.

However, alongside that algorithm update, Google started dishing out manual actions against spammy websites.

Barry Schwartz aggregated the X posts of several webmasters who noticed that some of their low-quality sites got completely de-indexed.

“I’m seeing AI spam sites getting fully deindexed left and right right now,” said Gael Breton, who runs the Authority Hacker website.

Another strategist, who said he monitors a collection of “horrible spam sites,” noticed that most of them had been completely delisted.

5. Let’s Clarify: What’s With the Simultaneous Updates?

So, as I noted above, the Google Core Update and the Google Spam Update went out simultaneously. That’s leading some SEOs to ask: “Why would Google release two updates at once?”

But that question really stems from a false premise: manual actions aren’t an algorithmic update.

So says Google’s Search Liaison Danny Sullivan: “We do generally try to avoid [overlapping updates], but we had both the core update and the spam update ready, and ultimately, we're going to push updates we think will improve the quality of search results. Manual actions aren't an update. New spam policies aren't an algorithmic update. So those aren't ‘updates’ that ‘overlap’ with the core and spam updates.”

Lots of folks still remember 2022 when the Helpful Content update rolled out at the same time as the Core update. Google received lots of complaints at that time.

6. Does Google Think This Is a Great Time to Double Down on AI Content?

No.

Over on Reddit, an SEO pointed out that he’s getting hit by the latest core update. He wants to know how others are dealing with it.

One commenter replied that he’s just going to post more content to make up for the lost traffic. But he’ll post that additional content with the assistance of AI.

“I hope you're making a joke, because this is a bad idea,” replied Google’s John Mueller. “Instead of digging deeper, I'd look around for ways to build something up. Maybe that means discarding the site and building up something for the long run.”

7. Does Google Use Core Web Vitals in Its Ranking Systems?

Yep.

That’s the latest after months, if not years, of confusion about the matter. You can read all about it in Google’s “Understanding page experience” document.

In the past, Google included this cryptic paragraph in that doc:

“There are many aspects to page experience, including some listed on this page. While not all aspects may be directly used to inform ranking, they do generally align with success in search ranking and are worth attention.”

That’s been replaced with this:

“Core Web Vitals are used by our ranking systems. We recommend site owners achieve good Core Web Vitals for success with Search and to ensure a great user experience generally.”

So yeah, pay attention to your Core Web Vitals scores.

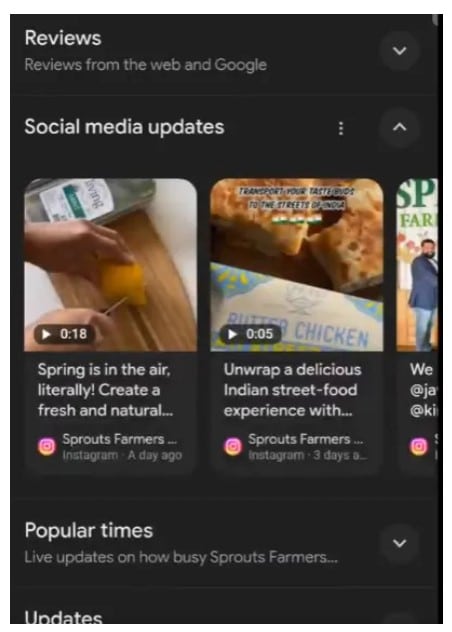

8. Social Media Posts Are Added to Google Business Listings

Google added a new section within its business listings in Search, showcasing recent social media posts from connected profiles.

Business owners should connect the following accounts:

- YouTube

- TikTok

- X

These previews will auto-populate and will help generate more interest in your products or services.

If your Google Business listings aren’t up to date, add your social profile links to take full advantage of this traffic.

9. Meta is Updating Advantage+ and Shopping Ads

Ready to improve your engagement, drive more conversions, and deliver personalized ads?

Meta wants to help you give consumers “the confidence to make a purchase after seeing an ad” by bringing new updates to Advantage+ Shopping Ads.

Here’s how they’re doing it:

- Advantage+ Creative Optimizations: Automatically optimizes video ads to fit a 9:16 ratio and generates multiple ad variations dynamically

- Advantage+ Catalog Ads Update: Include branded videos or customer interactions, not just static images

- Hero Images in Catalog Ads: Upload a hero image and Meta’s AI will show people the best products from your catalog to drive performance

- Ecommerce Ad Options: Expanded Magento and Salesforce Commerce Cloud integrations

- Reminder Ads: Incorporate external links to new products or sales

- Promo Codes: Alphanumeric promo codes to reduce cost per purchase and increase conversions

10. What New Tools Is Reddit Offering to Marketers?

This past week, Reddit announced a new toolkit designed to help businesses reach more customers on its platform.

The toolkit is called Reddit Pro. And, in spite of that “Pro” bit added to its name, the toolkit is offered free of charge.

Here’s what Reddit Pro brings to the table:

- AI-powered insights

- Performance analytics

- Publishing tools

- A dashboard

You can also use Reddit Pro to promote organic posts as paid ads.

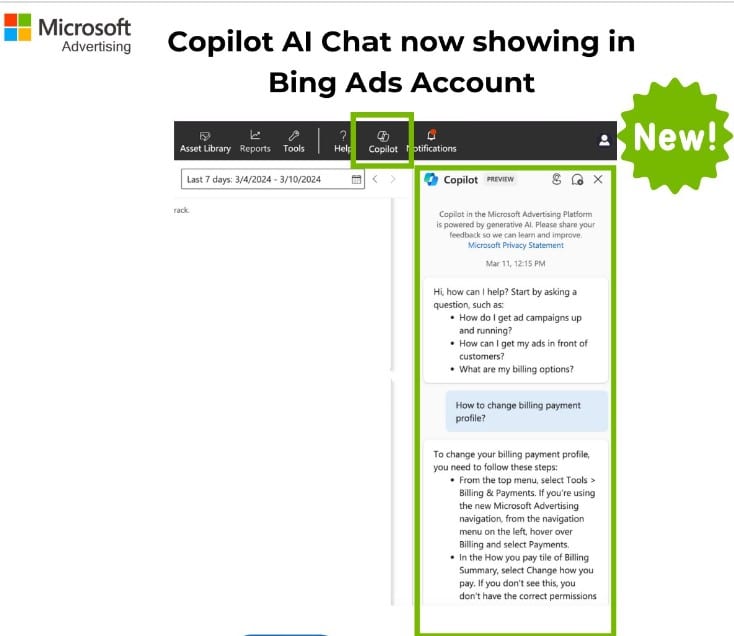

11. What’s the Latest With Microsoft Ads and Copilot?

Microsoft Ads quietly extended Copilot integration to more advertisers.

Thomas Eccel made the discovery recently and posted about it on X.

Search Engine Land reached out to Microsoft for more info. The company confirmed that more people now have access.

If you’re unfamiliar with Copilot, it’s a tool that uses AI to offer suggestions related to improving your ads. It can recommend images, headlines, and descriptions.

12. Are You Wasting Ad Dollars on ‘Made for Advertising’ Sites?

According to a new report by Adalytics, thousands of companies run ads on Made for Advertising (MFA) websites.

MFA websites generally offer little in terms of user experience or helpful content. They’re designed solely to line the pockets of the website owners.

According to Adalytics, brands can have their ads placed on MFA sites via the following supply-side platforms (SSPs):

- Criteo

- Smart AdServer

- OpenWeb

And the following demand-side platforms (DSPs) interacted with MFA inventory:

- Roku OneView

- Yahoo DSP

- Google DV360

Be careful out there.

13. What’s the Latest Guide From TikTok?

This time it’s a guide on how to reach women.

Undoubtedly, TikTok released the 15-page guide in connection with International Women’s Day (March 8).

As is the case with other TikTok guides, it’s packed with stats and facts to help you take your TikTok marketing to the next level. Assuming that women are part of your target market, of course.

TikTok also shared three key themes that businesses should consider when trying to reach women:

- How women are connecting on TikTok

- How women use TikTok to learn more about themselves

- What authenticity looks like to today’s women

The guide offers plenty of details on each of those three themes.

Homework

Before you enjoy that Guinness for St. Patrick’s Day, take care of these action items:

- Check to see if your ads are running on MFA websites. Take corrective action if necessary.

- Consider using Copilot with one of your Microsoft Ads campaigns and follow the recommendations. You might see better engagement.

- If you market your business on Reddit, take a look at Reddit Pro. It’s free.

- Keep an eye on your analytics to see how the latest Google updates are affecting your rankings.

- Take a look at that TikTok guide about marketing to women.