Google just turned 20, and it celebrated with a slew of new announcements.

All of them had one thing in common: a clear emphasis on AI.

Here’s a rundown of what you can expect from Google AI, and how you can prepare for it.

Google AI: Neural Matching

Google recently announced that it has started using a “neural matching” algorithm to better understand the concepts behind search queries.

And it’s kind of a big deal.

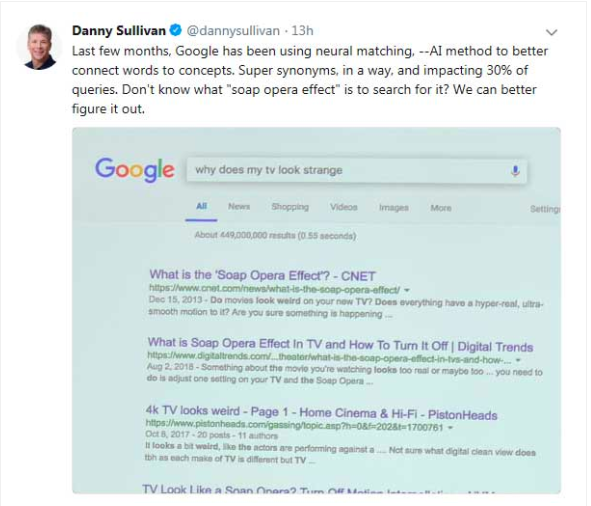

According to a tweet by Google’s Danny Sullivan, this neural matching algorithm affects 30% of searches.

Sullivan explains that this AI algorithm is used to better connect words to concepts.

Which basically means Google’s gotten a lot better at understanding synonyms (or as Sullivan calls them, “super synonyms”).

For example, have you ever heard of something called the “soap opera effect”?

Yeah, I hadn’t either.

Apparently, it’s when your TV looks a bit strange – a concept I’m far more familiar with.

So “why does my TV look strange” is something I would likely type into Google.

And Google – using its neural matching algorithm – would return results featuring the “soap opera effect” – even though those words weren’t in my original query.

[Image: Google AI is using neural matching]

Kinda cool, right?

The algorithm is intended to help Google better figure out what users are searching for. So even if I don’t know that what I’m experiencing is actually called the “soap opera effect,” Google does, and it can point me in the direction of more relevant answers because of it.
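To make the idea concrete, here is a toy sketch of concept-level matching. It assumes hand-picked, hypothetical word vectors (real systems learn embeddings from data, and Google hasn’t published how neural matching works) and compares plain word overlap with cosine similarity over averaged vectors.

```python
import math

# Hypothetical 3-dimensional "concept" vectors, hand-picked for illustration only.
# Dimensions: [television, visual oddity, food]. Real systems learn embeddings with
# hundreds of dimensions from data; these numbers are made up.
CONCEPT_VECTORS = {
    "tv": [1.0, 0.3, 0.0],
    "look": [0.4, 0.6, 0.0],
    "strange": [0.2, 0.9, 0.0],
    "soap": [0.5, 0.5, 0.2],
    "opera": [0.7, 0.3, 0.0],
    "effect": [0.3, 0.8, 0.0],
    "best": [0.2, 0.2, 0.3],
    "pizza": [0.0, 0.1, 1.0],
    "recipes": [0.0, 0.0, 0.9],
}


def tokenize(text):
    return text.lower().split()


def lexical_overlap(query, doc):
    """Count the distinct words the query and the document share."""
    return len(set(tokenize(query)) & set(tokenize(doc)))


def mean_vector(text):
    """Average the vectors of known words; unknown words (e.g. 'why') are skipped."""
    vectors = [CONCEPT_VECTORS[w] for w in tokenize(text) if w in CONCEPT_VECTORS]
    if not vectors:
        return [0.0, 0.0, 0.0]
    return [sum(dim) / len(vectors) for dim in zip(*vectors)]


def cosine(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def concept_similarity(query, doc):
    return cosine(mean_vector(query), mean_vector(doc))


query = "why does my tv look strange"
for doc in ["what is the soap opera effect", "best pizza recipes"]:
    print(doc, lexical_overlap(query, doc), round(concept_similarity(query, doc), 2))
```

Neither document shares a single word with the query, so word overlap is 0 for both; the averaged concept vectors still put the “soap opera effect” page close to 1.0 and the pizza page far below it.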

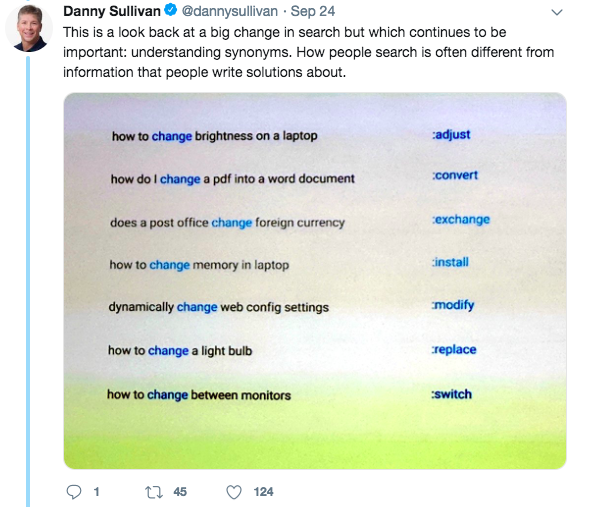

Which brings us to Sullivan’s follow-up point: “how people search is often different from information people write solutions about.”

Here’s another image he attached:

[Image: Google AI uses neural matching to identify synonyms in text]

What’s so cool about this is that the algorithm can identify not only synonyms, but also the ones most commonly used in a given context.

Each query containing “change” maps to a different synonym, based on what’s most natural in real conversation.

That’s why the ability to understand synonyms – or closely connected concepts – is so important to Google.

Google has made no secret of the fact that user experience is a top priority, and this new AI ability is a big step in helping the search engine decipher the underlying meaning of queries and return more accurate results.

And with the shift toward voice search, identifying and using natural language matters more than ever.

Google AI, Neural Matching and Deep Relevance Ranking

Google’s never exactly been straightforward with its algorithms.

So while we don’t know for sure how the new(er) neural matching algorithm works, we can get a little insight from one of Google AI’s latest research papers, Deep Relevance Ranking Using Enhanced Document-Query Interactions.

In it, they explain the concept of Deep Relevance Ranking, or Ad-Hoc Retrieval.

It goes something like this: “Document relevance ranking, also known as ad-hoc retrieval… is the task of ranking documents from a large collection using the query and the text of each document only.”
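As a rough illustration of ranking “using the query and the text of each document only,” here is Okapi BM25, a classic ad-hoc retrieval function. It is swapped in for the paper’s neural model, not taken from it; the k1 = 1.2 and b = 0.75 defaults are common choices, not values from the paper.

```python
import math
from collections import Counter


def bm25_scores(query, documents, k1=1.2, b=0.75):
    """Score each document against the query with Okapi BM25.

    Only the query text and document text are used: no links, no authority.
    """
    tokenized = [doc.lower().split() for doc in documents]
    n_docs = len(tokenized)
    avg_len = sum(len(d) for d in tokenized) / n_docs
    # Document frequency: how many documents contain each term.
    df = Counter()
    for doc in tokenized:
        df.update(set(doc))
    scores = []
    for doc in tokenized:
        tf = Counter(doc)
        score = 0.0
        for term in query.lower().split():
            if term not in tf:
                continue
            # Smoothed IDF; the "+ 1" inside the log keeps it non-negative.
            idf = math.log((n_docs - df[term] + 0.5) / (df[term] + 0.5) + 1)
            freq = tf[term]
            # Length normalization: long documents need more matches to score well.
            norm = freq + k1 * (1 - b + b * len(doc) / avg_len)
            score += idf * freq * (k1 + 1) / norm
        scores.append(score)
    return scores


def rank(query, documents):
    scores = bm25_scores(query, documents)
    order = sorted(range(len(documents)), key=lambda i: scores[i], reverse=True)
    return [documents[i] for i in order]


docs = [
    "how to fix the soap opera effect on your tv",
    "soap making at home",
    "tv mounting guide",
]
print(rank("soap opera effect tv", docs)[0])  # how to fix the soap opera effect on your tv
```

BM25 only rewards exact word matches; neural models like the one in the paper aim to also credit related words, which is where “super synonyms” come in.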

The paper is quick to point out that this is a step away from traditional ranking, as it doesn’t take signals like links into consideration.

In his Search Engine Journal article, Roger Montti points out that Deep Relevance Ranking is, essentially, re-ranking pages that have already been ranked.

So a page would be ranked the traditional way – links and all – before Deep Relevance Ranking kicks in to identify these “super synonyms.”
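Montti’s reading describes a two-stage pipeline. A minimal sketch, assuming a hypothetical `authority` number stands in for the traditional signals and a toy word-overlap score stands in for the relevance model:

```python
from dataclasses import dataclass


@dataclass
class Page:
    url: str
    text: str
    authority: float  # hypothetical stand-in for link-based "traditional" signals


def text_relevance(query, text):
    """Toy relevance: the fraction of query words that appear in the page text."""
    terms = set(query.lower().split())
    words = set(text.lower().split())
    return len(terms & words) / len(terms) if terms else 0.0


def two_stage_rank(query, pages, top_k=3):
    # Stage 1: traditional ordering by authority; only the top_k pages survive.
    candidates = sorted(pages, key=lambda p: p.authority, reverse=True)[:top_k]
    # Stage 2: re-rank just those candidates by how well their text fits the query.
    # Python's sort is stable, so ties keep their authority order.
    return sorted(candidates, key=lambda p: text_relevance(query, p.text), reverse=True)


pages = [
    Page("a", "tv buying guide", 0.9),
    Page("b", "what is the soap opera effect on tv", 0.8),
    Page("c", "tv repair shops", 0.7),
    Page("d", "soap opera effect explained", 0.1),
]
print([p.url for p in two_stage_rank("soap opera effect", pages)])  # ['b', 'a', 'c']
```

Page d matches the query well but never reaches stage two: re-ranking only reshuffles pages the traditional ranking already surfaced.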

In that way, it’s less focused on traditional SEO staples like keywords and links, and more focused on relevance: matching search queries to web pages using only the text of the query and the page.

He also explains that while the SERP rankings of a traditional search are determined by page authority, link quality, keyword density, etc., this approach surfaces results based, again, on how well the query fits the actual words on the web page.

What that means for marketers is that a clear focus on user intent and outstanding content is becoming even more important.

For some, it could represent an opportunity, as search results may move away from links and keywords to focus more on page content (but don’t go overhauling your strategy just yet!)

New Google AI Additions

Google’s not stopping there.

The search giant recently revealed its new approach to improving search, based on three major shifts:

- The shift from answers to journeys.

- The shift from queries to providing a queryless way to get information.

- The shift from text to a more visual way of finding information.

Here’s what you can expect from Google in the near future.

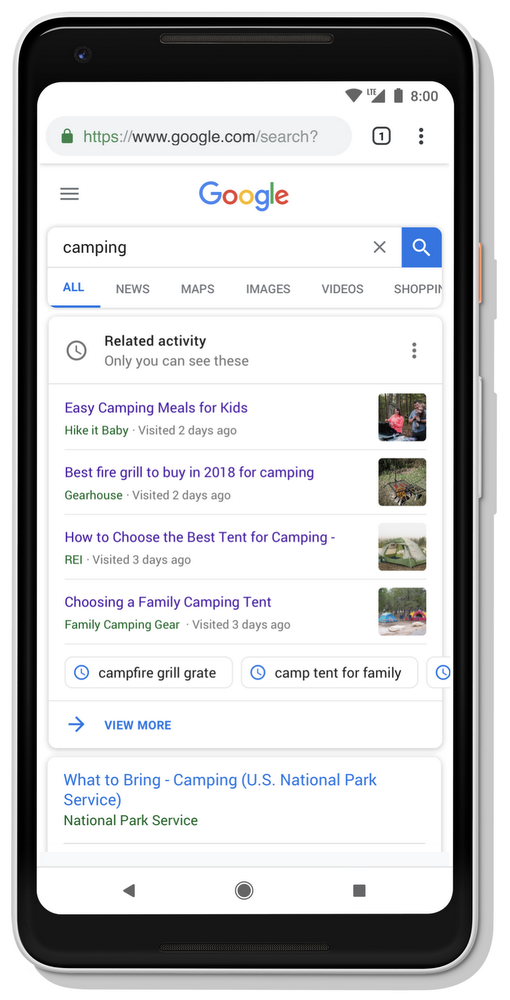

Google AI: Activity Cards

First up: activity cards, designed to help people pick up where they left off in their Google searches.

Google recognizes that not all searches can be completed with quick answers. Some, it says, can span several days.

In a recent post, Google gives the example of planning a vacation. Trip planning, we know, isn’t completed in a single Google search.

It can start months in advance as the traveler researches different places to go and things to do, before narrowing in on places to stay and how to get there.

Or, you might be someone who frequently searches for “workout ideas” or “cheap restaurants near me.”

In the past, you pretty much had to start from scratch with each search. Now Google has a way to help you resume a search where you left off, without having to sort through your search history.

Now, when you perform a search related to something you’ve searched in the past, Google will show you an Activity Card at the top of the SERPs.


This Activity Card will include relevant pages you’ve already visited and previous queries you’ve performed on the topic.

That way, you can effectively “retrace your steps” to find any sites you’ve already visited that may have been helpful (or avoid ones that weren’t).

Note that this card won’t appear for every search – only when Google “intelligently” feels it’s useful.
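Google hasn’t said how it decides when a card is “useful.” A hypothetical heuristic might look like this: collect past searches that share a word with the current query, and show a card only when there are enough of them. The `min_related` threshold and the history format are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class HistoryEntry:
    query: str
    visited: list = field(default_factory=list)  # pages opened from that search


def related_entries(current_query, history):
    """Past searches sharing at least one word with the current query.

    A real system would also ignore common words like "to" or "in".
    """
    terms = set(current_query.lower().split())
    return [e for e in history if terms & set(e.query.lower().split())]


def build_activity_card(current_query, history, min_related=2):
    """Return a card of past queries and visited pages, or None if it wouldn't help."""
    related = related_entries(current_query, history)
    if len(related) < min_related:
        return None  # too little related history: show no card
    pages = []
    for entry in related:
        for url in entry.visited:
            if url not in pages:  # de-duplicate, keeping first-seen order
                pages.append(url)
    return {"past_queries": [e.query for e in related], "visited_pages": pages}


history = [
    HistoryEntry("lisbon hotels", ["example.com/hotel-a"]),
    HistoryEntry("things to do in lisbon", ["example.com/tram-28", "example.com/hotel-a"]),
    HistoryEntry("workout ideas"),
]
print(build_activity_card("lisbon restaurants", history))
print(build_activity_card("pizza dough", history))  # None
```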

Beyond that, you’re still in control.

You can remove any results you don’t want to see from your history, pause the card, or remove it altogether.

Look for the card in your search results later this year.

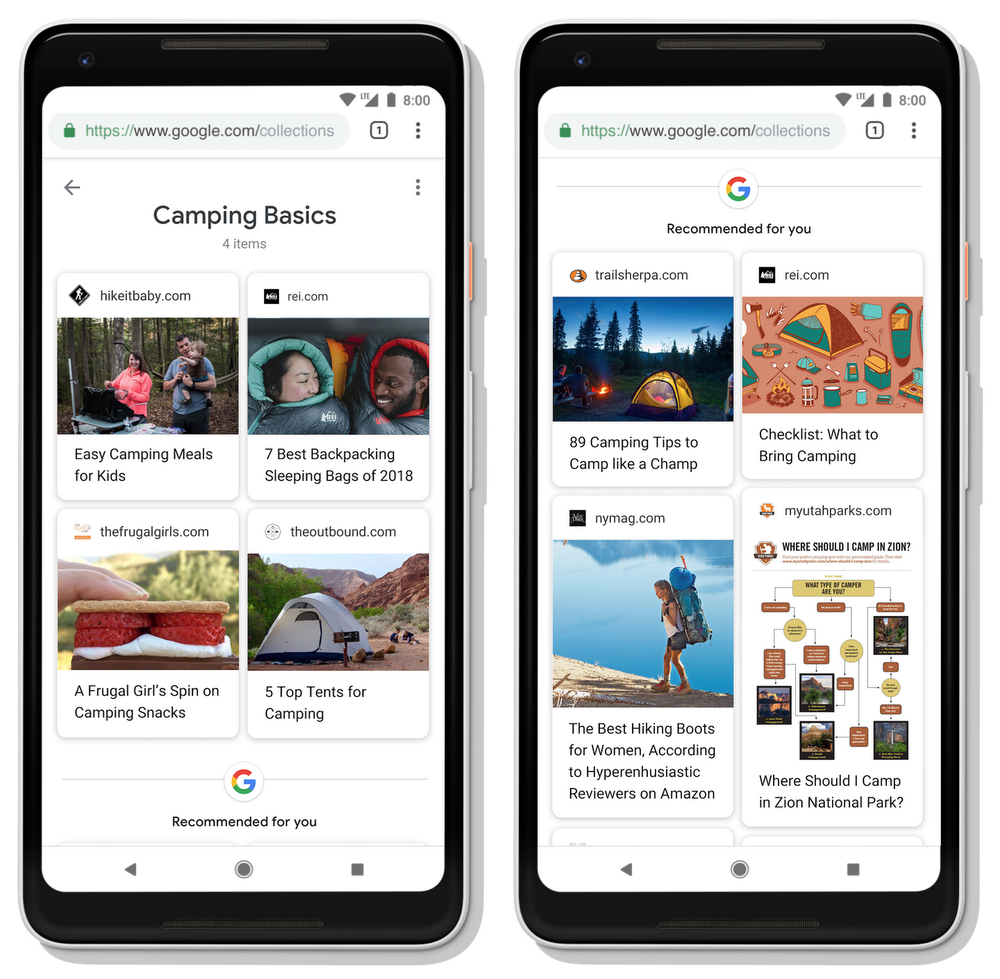

Google AI: Collections

In another attempt to help users search more efficiently, Google’s also introducing Collections.

These are kind of like bookmarks, only they’ll appear in your search results.

So as you search, you can add relevant content to your Collections. These can be websites or individual articles or images.

They’ll appear as separate tabs by subject, which you can then click on to expand all results in that particular collection.
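Under the hood, a collection is essentially a mapping from subject to saved items. A minimal sketch with a hypothetical `Collections` class (an illustration, not a Google API):

```python
from collections import defaultdict


class Collections:
    """Toy model of saved search items grouped into per-subject tabs."""

    def __init__(self):
        self._tabs = defaultdict(list)

    def add(self, subject, item):
        # Saving the same page twice to one collection is a no-op.
        if item not in self._tabs[subject]:
            self._tabs[subject].append(item)

    def tabs(self):
        """Subject tabs, alphabetically."""
        return sorted(self._tabs)

    def expand(self, subject):
        """All items saved under one subject (empty list for an unknown subject)."""
        return list(self._tabs.get(subject, []))


saved = Collections()
saved.add("Lisbon trip", "example.com/tram-28")
saved.add("Lisbon trip", "example.com/tram-28")
saved.add("Shelving ideas", "example.com/diy-shelf.jpg")
print(saved.tabs())                  # ['Lisbon trip', 'Shelving ideas']
print(saved.expand("Lisbon trip"))   # ['example.com/tram-28']
```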


Kind of like Pinterest boards, if you will.

Google will also suggest you make Collections based on your findings.

Collections used to be available on Google+ (RIP), but they’ll be available to all search users later this year.

Google AI: Dynamic Search Results

Next up in the Google and AI evolution: dynamic organization of search results.

Again, Google wants to make your search easier, even if you don’t know exactly what you’re searching for.

You may sometimes find that when you search, you don’t have a clear path for what to search next.

You’ll start out broad with something like “what is ai,” but with complicated subjects especially, it’s not always clear where to go from there.

So Google’s helping you out.

With its dynamic organization, Google will help you determine what to search for next by showing subtopics most relevant to what you’re searching for.

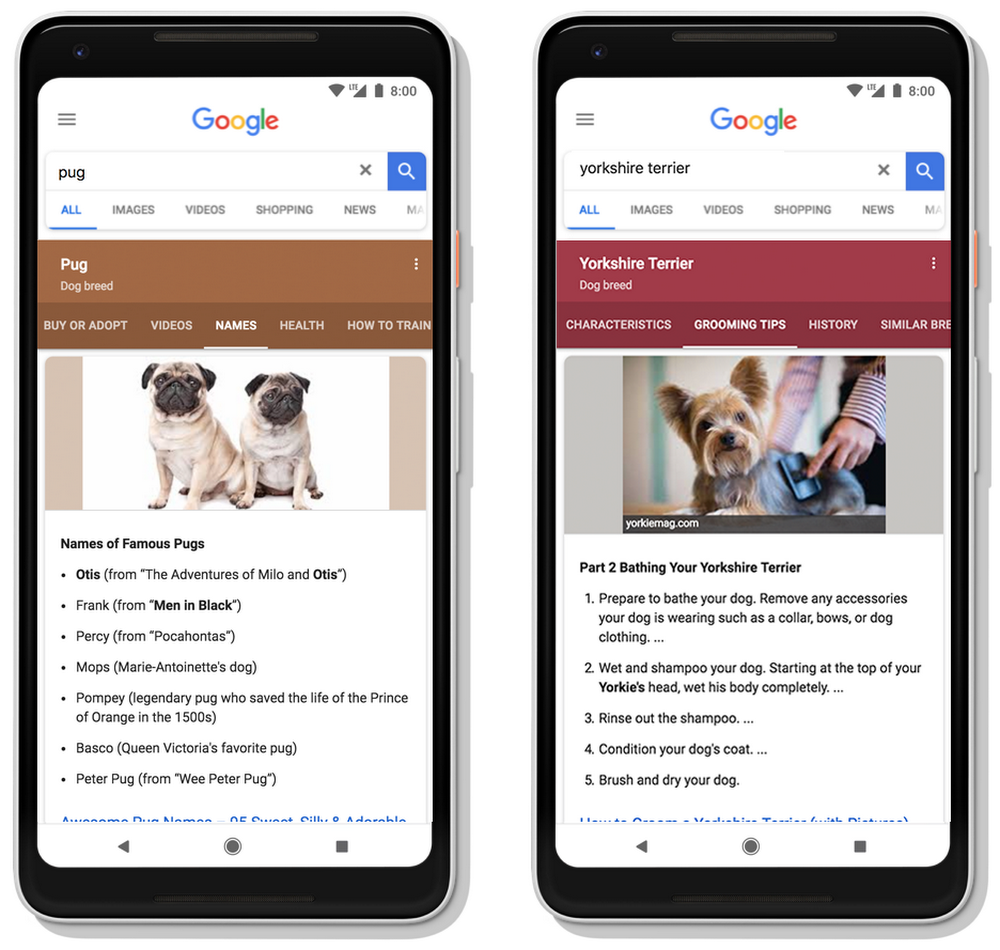

Take dogs, for example.

Type in a search for “pugs,” and Google will show you a tab at the top of the screen with subtopics like “names,” “how to train,” “health,” etc.

But search for another breed, and you’ll get different tabs. A “Yorkshire Terrier” search might show tabs like “grooming tips.”

[Image: Google AI dynamic organization in search results]

Grooming a Yorkshire Terrier, of course, is a bit more involved than grooming a pug, so Google realizes that it’s a more relevant follow-up search.

And – because we’re dealing with AI here – the feature will continue to learn over time.

As new information is published and more searches are conducted, Google will update its tab results to better reflect the most relevant information.
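One plausible way to produce and update those tabs is to learn them from how people refine their searches. This sketch assumes a hypothetical log of queries and treats whatever follows the breed name as a subtopic; Google hasn’t described its actual method.

```python
from collections import Counter, defaultdict


class SubtopicModel:
    """Toy sketch: learn subtopic tabs for an entity from how people refine searches."""

    def __init__(self):
        self._counts = defaultdict(Counter)

    def observe(self, query, entity):
        """Record one logged query; any text after the entity name counts as a subtopic."""
        q = query.lower().strip()
        entity = entity.lower()
        if q.startswith(entity + " "):
            self._counts[entity][q[len(entity):].strip()] += 1

    def tabs(self, entity, n=3):
        """The n most common subtopics (ties keep first-seen order)."""
        return [topic for topic, _ in self._counts[entity.lower()].most_common(n)]


model = SubtopicModel()
for q in ["pug names", "pug health", "pug names", "pug training", "pug names", "pug health"]:
    model.observe(q, "pug")
for q in ["yorkshire terrier grooming tips", "yorkshire terrier size",
          "yorkshire terrier grooming tips"]:
    model.observe(q, "Yorkshire Terrier")
print(model.tabs("pug"))                   # ['names', 'health', 'training']
print(model.tabs("Yorkshire Terrier", 2))  # ['grooming tips', 'size']
```

Because the counts keep growing as new queries are observed, the tabs shift over time: a burst of “pug training” searches would push that tab to the front.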

You can already catch this one live on some searches, and Google will continue to expand it.

Google AI: Discover

This one’s more of a makeover than a new feature, with some notable AI additions.

You may be familiar with the Google Feed.

Like most digital feeds (think Facebook, Instagram, etc.), it’s designed to show you relevant content, even when you aren’t actively searching.

Introduced just last year, the Feed quickly grew to over 800 million monthly users.

And Google has big plans for it.

Renamed Discover, the new incarnation comes with a complete redesign to make it even easier to find the content you’re interested in.


The new design comes with topic headers and a new Discover icon.

The cool thing about this icon? You’ll soon start seeing it in search results.

If you like what you see, you can tap the Follow button so Google will know to show you more information about that topic in the future.

Discover is also shifting to a more visual approach: users will start seeing more photos and videos in their feeds, as well as an emphasis on evergreen content.

Using AI, Discover can even tailor content to how well you already know a topic.

So if you’re a guitar player, Google can predict your level of expertise and return results suitable for a novice or expert.


If the content isn’t what you want, you can indicate you want less of it. Or more, if it strikes your fancy.
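The Follow, “more” and “less” controls can be pictured as per-topic weights that reorder the feed. A toy sketch; the starting weight of 1.0 and the multipliers are hypothetical, not Google’s values.

```python
from collections import defaultdict


class DiscoverFeed:
    """Toy sketch: per-topic weights that user feedback nudges up or down."""

    def __init__(self):
        self.weights = defaultdict(lambda: 1.0)  # every topic starts at 1.0

    def follow(self, topic):
        self.weights[topic] += 1.0

    def more(self, topic):
        self.weights[topic] *= 1.5

    def less(self, topic):
        self.weights[topic] *= 0.5

    def rank(self, cards):
        """cards: (title, topic) pairs; cards on higher-weighted topics come first."""
        return sorted(cards, key=lambda card: self.weights[card[1]], reverse=True)


feed = DiscoverFeed()
feed.follow("guitar")
feed.less("celebrity")
cards = [("Red carpet recap", "celebrity"), ("Barre chord drills", "guitar"), ("Storm update", "news")]
print([title for title, _ in feed.rank(cards)])
# ['Barre chord drills', 'Storm update', 'Red carpet recap']
```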

Google also wants you to know that it’s taking an unbiased approach to news in Discover: it will use the same technology behind Full Coverage in Google News to offer takes from all perspectives.

Discover is coming soon to google.com on all mobile browsers.

Google AI: Visual Content

You may remember the launch of AMP Stories earlier this year.

Designed to bring Google’s fast-loading AMP technology to visual content, AMP Stories give creators an easier, faster way to produce visually immersive content.

Now, Google’s giving them the AI treatment.

Google has this to say:

“We’re beginning to use AI to intelligently construct AMP stories and surface this content in Search. We’re starting today with stories about notable people—like celebrities and athletes—providing a glimpse into facts and important moments from their lives in a rich, visual format. This format lets you easily tap to the articles for more information and provides a new way to discover content from the web.”

Additionally, Google is now better able to understand the actual content of a video, allowing the search engine to provide users with more relevant video results.

So, if Google sees a video featuring a national park, it won’t just know that it’s a video about a national park.

It will know if it’s focused on certain major landmarks, campsites, or wildlife. Then, if you search for a specific landmark, or even “major landmarks,” it can give you a more accurate video result.
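A simplified picture of segment-level video understanding: assume some model (hypothetical here) has labeled time ranges in the video, and search matches the query against those labels rather than against the video as a whole.

```python
from dataclasses import dataclass


@dataclass
class Segment:
    start_s: int  # segment start, in seconds
    end_s: int    # segment end, in seconds
    labels: set   # hypothetical labels a video-understanding model assigned


def find_segments(query, segments):
    """Segments whose labels share words with the query, best match first."""
    terms = set(query.lower().split())
    scored = []
    for seg in segments:
        label_words = {w for label in seg.labels for w in label.lower().split()}
        overlap = len(terms & label_words)
        if overlap:
            scored.append((overlap, seg))
    # Sort on the overlap count only, so Segment objects are never compared.
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [seg for _, seg in scored]


park_video = [
    Segment(0, 90, {"half dome", "major landmarks"}),
    Segment(90, 200, {"campsites"}),
    Segment(200, 300, {"bears", "wildlife"}),
]
for seg in find_segments("major landmarks", park_video):
    print(seg.start_s, seg.end_s)  # 0 90
```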

[Image: Google AI can better understand the contents of a video]

With this technology, Google is getting closer to video results that are as accurate as its text results.

Which means the days of a primarily visual web are that much closer.

Google’s also placing emphasis on visual journeys.

What’s a visual journey? It’s a search that doesn’t stop with a single image.

Often when we search, we want information and context with an image, not a standalone picture.

So Google is starting to give more weight to the web page where the image lives.

Google notes that “over the last year, we’ve overhauled the Google Images algorithm to rank results that have both great images and great content on the page. For starters, the authority of a web page is now a more important signal in the ranking. If you’re doing a search for DIY shelving, the site behind the image is now more likely to be a site related to DIY projects.”

Google also says that fresher content will be prioritized in the results.

Additionally, it’s prioritizing sites where images are easy to find: images that are central to the page and placed higher up.
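The signals Google lists (page authority, freshness, and how prominent the image is on the page) can be combined into a single score. A sketch with made-up weights and a hypothetical 180-day freshness half-life; Google hasn’t published how it weighs these signals.

```python
from dataclasses import dataclass


@dataclass
class ImageResult:
    url: str
    page_authority: float    # 0..1, hypothetical normalized authority of the host page
    days_since_update: int
    position_on_page: float  # 0.0 = top of the page, 1.0 = bottom
    is_central: bool         # the image is the main subject of the page


# Hypothetical weights, chosen only for illustration.
WEIGHTS = {"authority": 0.5, "freshness": 0.2, "prominence": 0.3}


def freshness(days, half_life_days=180):
    """Exponential decay: updated today -> 1.0, updated half_life_days ago -> 0.5."""
    return 0.5 ** (days / half_life_days)


def prominence(result):
    """Higher on the page scores more; non-central images are halved."""
    score = 1.0 - result.position_on_page
    return score if result.is_central else score * 0.5


def image_score(result):
    return (WEIGHTS["authority"] * result.page_authority
            + WEIGHTS["freshness"] * freshness(result.days_since_update)
            + WEIGHTS["prominence"] * prominence(result))


diy_site = ImageResult("example.com/diy-shelf", 0.8, 30, 0.1, True)
generic = ImageResult("example.com/misc-gallery", 0.3, 900, 0.8, False)
ranked = sorted([generic, diy_site], key=image_score, reverse=True)
print([r.url for r in ranked])  # ['example.com/diy-shelf', 'example.com/misc-gallery']
```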

You’ll also start seeing image captions and website URLs pop up, so users can better determine if it’s a website they really want to visit.

And lastly, Google is doing more with Google Lens.

Until now, people used it with their phone cameras to learn more about what they photographed: landmarks, clothing items, etc.

Now, Google is bringing Google Lens to image results. With it, you can further explore preselected parts of a photo and the products or objects in it, along with any associated product pages.

You can also manually draw on part of a photo to find more specific information.

Wrapping Up Google AI

It’s pretty clear that the future of search lies in AI – and in businesses’ ability to adapt.

From the new neural matching algorithm to the emphasis on visual search, Google’s changing the way it approaches and delivers search results.