You have no idea how to rank in voice search until you read the actual quality rater guidelines.

In this article, I go through each guideline piece by piece. By the end, you’ll have a deep understanding of how to rank in voice search.

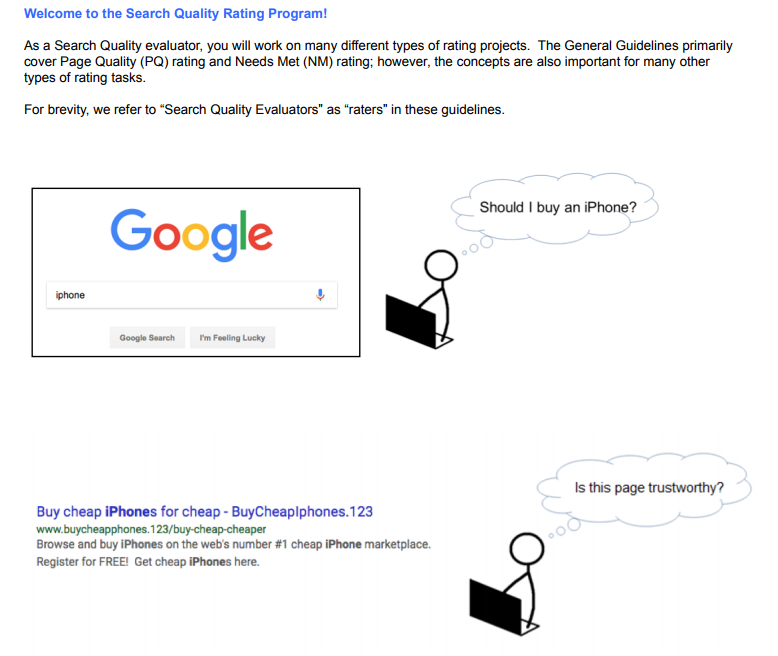

Search quality raters are humans who conduct real searches. Then, they inform Google about the quality of the search results.

If the raters give a single search result poor marks, then you can expect Google to demote that URL in the search engine results pages (SERPs). Likewise, if they give a single search result very high marks, then Google will likely promote it.

Basically, quality raters are the human component of the search algorithm.

Some of those quality raters have been asked to evaluate voice search results. You need to know about the criteria those folks are using to determine quality.

In this article, we’ll go over what you need to know about the voice search quality rater guidelines.

Why Voice Search Raters?

Why does Google evaluate voice search differently from traditional, browser-based search? Because voice search results are “eyes-free.”

In other words, when you use Google Assistant, Siri, Alexa, or some other voice-driven device to perform a search, you won’t see the results. You’ll hear them.

That’s a totally different means of communication than what you’re accustomed to with browser-based search.

It’s usually the case that a picture is worth a thousand words, but you often aren’t going to get any pictures when Alexa answers your question. You need an answer that can be delivered verbally.

As a result, Google uses a different set of criteria to evaluate quality in voice answers versus browser-based answers.

That’s why the Big G has hired a number of voice search quality raters. Those people use different devices to ask questions. Then, they tell Google what they think of the answers.

Guidelines for Voice Search Raters

Google didn’t just tell its raters to do some searches and then report back on the results based on their own opinions. Instead, the company developed a set of guidelines that raters need to use when evaluating quality.

Fortunately, those guidelines are available online. Even better: you can use them to evaluate the quality of voice search results from your own website.

That’s important because you can (and should) think of the raters’ feedback as a search signal. In other words, the more that raters like what they get from your site, the better it will perform in the SERPs.

Two Categories of Voice Search Ratings

The guidelines tell raters to evaluate the quality of voice search results based on two categories: information satisfaction and speech quality.

Information satisfaction evaluates the response in terms of giving the user what he or she is looking for. It’s all about accuracy.

There are two different types of responses to evaluate: answer responses and action responses.

Answer responses, as the name implies, answer a question (“Who is Marie Osmond?”). Action responses perform a service (“Play Paper Roses by Marie Osmond”).

Speech quality disregards factual accuracy and instead looks at the way that the answer was delivered. It relies on three separate criteria: length, formulation, and elocution.

Let’s look at all of these criteria in some detail.

Answer Response Quality

We’ll start with answer responses.

When users ask a question or blurt out a name, they’re going to expect a reply that gives them the information they’re looking for. That means the response should not only address their specific query, but also offer factually accurate detail.

Raters are asked to score the response in terms of how well it meets their needs. Possible ratings include:

- Fully meets

- Highly meets

- Moderately meets

- Slightly meets

- Fails to meet

Quality raters can also opt for a “between” rating, such as “Moderately meets to slightly meets.”
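
If you want to log these ratings while auditing your own answers, a rough Python sketch like the one below can help. The label names come from the list above. The numeric ordering, the `NeedsMet` and `between` names, and the rule that a “between” rating joins two neighboring labels are my own assumptions, not something the guidelines spell out.

```python
from enum import IntEnum


class NeedsMet(IntEnum):
    """The five rating labels, ordered worst to best (the numbers are an assumption)."""
    FAILS_TO_MEET = 0
    SLIGHTLY_MEETS = 1
    MODERATELY_MEETS = 2
    HIGHLY_MEETS = 3
    FULLY_MEETS = 4


def label(rating: NeedsMet) -> str:
    """Turn FULLY_MEETS into 'Fully meets'."""
    return rating.name.replace("_", " ").capitalize()


def between(higher: NeedsMet, lower: NeedsMet) -> str:
    """Build a 'between' label from two neighboring ratings (assumed rule)."""
    if higher - lower != 1:
        raise ValueError("a between rating joins two neighboring labels")
    return f"{label(higher)} to {label(lower).lower()}"
```

Calling `between(NeedsMet.MODERATELY_MEETS, NeedsMet.SLIGHTLY_MEETS)` produces the same “Moderately meets to slightly meets” wording used in the example above.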

The guidelines offer several examples of searches with responses and explain how raters should score each one of them.

For example, the query “How tall was Charles Darwin” returned the response “Charles Darwin stood about 5 feet, 11 ½ inches tall.”

Google advises raters to score that as “Fully Meets.” That makes perfect sense because the response answers the question.

In another example, the query “Who is president of the United States?” returns the response “According to [website name here], the president of the United States is the elected head of state of the United States.”

While that’s technically accurate, it’s probably not the answer that the user was looking for. That response is rated “Slightly meets.”

Finally, the query “will it rain this evening?” returns “I’m not sure how to help with that.”

Obviously, that gets a “Fails to meet” rating.

Action Responses

When users ask a voice search assistant to play a song or perform some other kind of similar service, they’re looking for an action response. Those responses need to get rated as well.

Google uses the exact same rating system for action responses as it does for answer responses.

In the guidelines, Google also provides a number of queries and responses with their respective ratings.

For example, the query “play Beethoven Ninth Symphony on Spotify” results in the assistant playing media. In this case, it’s Beethoven’s Ninth Symphony.

That response gets a “Fully meets” rating.

The query “play music video on my TV” results in the assistant playing a YouTube music video on the user’s television set that only contains the song and the lyrics.

That response earns a “Slightly meets” rating because the video it played contained only the song and its lyrics. When users ask to play a music video, they usually want the official music video from VEVO.

Finally, the query “play Mumford and Sons Reminder” returned a response asking the user to specify a reminder time.

That got a “Fails to meet” rating because the user wanted to play a song named “Reminder” and not set a reminder.

Length Speech Quality Rating

Now that we’ve covered the information satisfaction ratings for answer and action responses, let’s look at the speech quality ratings. We’ll start with the length rating.

The length rating answers the question: was the response too long, too short, or just right?

Remember, there is such a thing as too much information. That’s especially true when it comes to voice responses as users can easily lose attention while listening to a long-winded answer.

On the other hand, a response can be so short that it doesn’t contain sufficient detail.

The trick is to get it just right.

One query example in the guidelines asks “how far is Alpha Centauri from the sun?” The response to that is “Alpha Centauri is 4.367 light years from the Earth.”

Note that the answer doesn’t really address the question. The question asks how far Alpha Centauri is from the sun. It doesn’t ask how far it is from the Earth.

Remember, though, the speech quality ratings don’t cover accuracy. They cover the quality of the verbal reply itself.

In this case, that response earned an “OK” rating in terms of length. That’s as good as it gets.

In another example, the query “is a pregnancy test accurate” returned the response “On the website [website name here], they say: However, recent research indicates that if a woman has missed a period, then many home pregnancy tests are not sensitive enough and cannot diagnose pregnancy.”

That earned a rating of “A bit long.”

Why? A simple “no” would have sufficed.
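
You can run a rough version of the length check on your own answers by estimating how long they take to read aloud. The Python sketch below is only that, a sketch: the 150 words-per-minute speaking rate and the 10-second ceiling are numbers I picked for illustration, and `spoken_seconds` and `length_flag` are hypothetical helper names. The guidelines don’t publish a word count or a time limit.

```python
WORDS_PER_MINUTE = 150  # assumed speaking rate, not taken from the guidelines
MAX_SECONDS = 10.0      # hypothetical ceiling before an answer feels long


def spoken_seconds(text: str, wpm: int = WORDS_PER_MINUTE) -> float:
    """Estimate how many seconds it takes to read the text aloud."""
    word_count = len(text.split())
    return word_count * 60 / wpm


def length_flag(text: str, max_seconds: float = MAX_SECONDS) -> str:
    """Return 'OK' or 'A bit long' based on the estimated speaking time."""
    if spoken_seconds(text) > max_seconds:
        return "A bit long"
    return "OK"
```

With those assumed numbers, the Alpha Centauri answer comes back “OK” and the pregnancy test answer comes back “A bit long.” Notice that the sketch doesn’t try to flag answers that are too short. Since a one-word “no” can be the right length, word count alone can’t tell you that.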

Formulation Speech Quality Rating

The next way to evaluate speech quality is by looking at the formulation of the response itself.

Here, raters are answering the following questions: Was the response grammatically accurate? Was it formulated in a way that you would expect a native speaker to talk? When the answer included an attribution, was the source of the content understandable?

In one example, the query “where did Einstein go to college?” returns the response “On the [website name here], they say Joseph Einstein is expected to attend Harvard in Fall 2017.”

Once again, the answer isn’t what the user was looking for. Also once again, speech quality ratings aren’t concerned with accuracy.

In this case, the response earned a “Good” rating for formulation. Even though the response didn’t address the intended question, it’s grammatically accurate and uses proper sourcing.

In another example, the query “what is the outer layer of your skin called?” got the response “According to the website [website name here]: called the epidermis, the outer layer, and called the dermis, the inner layer.”

That response earned a “Bad” rating for formulation. That’s because it wasn’t a complete sentence.

Elocution Speech Quality Rating

The final criterion in the speech quality rating is elocution.

When raters evaluate elocution, they’re answering these questions: How accurate was the pronunciation of each word? How natural was the tone of the voice? Was the speed of the response too fast, too slow, or just right?

Basically, raters are evaluating the conversational nature of the response.

In one example, the query “what does BMI stand for?” returns the response “Body Mass Index, according to Wikipedia: BMI stands for Body Mass Index and it’s becoming a universal to measure body ‘fatness’ even though it doesn’t actually measure body fat like using a caliper or underwater weighing.”

That earned a “Good” rating for elocution. All of the words were spoken properly and the response wasn’t delivered too fast.

In another example, the query “how old is Achilles during Iliad?” returns the following response: “According to [website name here]: In that movie about Troy, Achilles, is depicted as being 27 28th years old and Agamemnon is 26 27th years old. Hector is about 30 31th years old and Tiresias is 54 55th years old.”

That’s not only a long-winded answer, but it also doesn’t identify ages properly. People don’t usually say “28th” in front of “years old.” Also, “31th” should be “31st” if it’s going to be used at all.

Unsurprisingly, that response earned a “Bad” rating in terms of elocution.
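
Problems like “31th” are easy to catch before a voice assistant ever reads your content aloud. Here’s a small Python sketch that scans text for the two issues in that example: ordinals with the wrong suffix and ordinals sitting in front of “years old.” The function names and the two rules are my own illustration. They aren’t checks that Google describes.

```python
import re
from typing import List

# Matches a number followed by an ordinal suffix, such as 28th or 31th.
ORDINAL = re.compile(r"\b(\d+)(st|nd|rd|th)\b", re.IGNORECASE)


def expected_suffix(n: int) -> str:
    """Return the correct English ordinal suffix for n."""
    if 11 <= n % 100 <= 13:
        return "th"
    return {1: "st", 2: "nd", 3: "rd"}.get(n % 10, "th")


def ordinal_problems(text: str) -> List[str]:
    """List ordinals that are malformed or used before 'years old'."""
    problems = []
    for match in ORDINAL.finditer(text):
        token = match.group(0)
        number = int(match.group(1))
        suffix = match.group(2).lower()
        correct = expected_suffix(number)
        if suffix != correct:
            problems.append(f"{token}: expected {number}{correct}")
        # Look at what immediately follows the ordinal.
        if re.match(r"\s+years?\s+old\b", text[match.end():], re.IGNORECASE):
            problems.append(f"{token}: ordinal used before 'years old'")
    return problems
```

Run it against “Hector is about 30 31th years old” and it reports both problems for “31th.” A sentence like “He finished 21st.” comes back clean.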

Action Items

So now that you know how search quality raters evaluate voice responses, what can you do with that information? Quite a bit, actually.

First, put yourself in the position of a search quality rater and evaluate the answers delivered from your own website. Start by looking at information satisfaction and then move on to speech quality.

When you’re looking at information satisfaction, be sure that your answers address the question directly and get the facts right.

In terms of speech quality, check that each answer is long enough to be useful but not so long that people might have trouble following it. Take note of the conversational tone of your website, too. Make sure the content doesn’t sound too academic or robotic. Also, check your content for grammatical errors.

Wrapping Up Voice Search Quality Ratings

Your voice search optimization efforts aren’t complete until you’ve gone through the search quality rater guidelines. Make sure you understand what those raters are looking for in voice responses so that your site gains maximum exposure. That’s how you’ll build brand-name awareness and, ultimately, generate more revenue.